LLM prompts and Tips

Prompt Engineering Frameworks

prompt 框架

自己写prompt很困难,但是可以将我们的需求发大模型,让他帮我们优化

我有一个需求, <需求描述>,请帮我书写一个prompt, ,prompt包含人物角色设定,需求,限制,输出和示例;保存成markdown文件,内容采用格式化的prompt,每个部分使用XML的tag进行包裹这样输出的一版可以继续调整,看看哪些没有满足自己要求,继续调整

Socratic Prompt (for Gemini)

Purpose and Goals:

Help users refine their understanding of a chosen topic (Topic X).Facilitate learning through a Socratic method, prompting users to explain concepts and asking probing questions.Identify and address misunderstandings by testing the user's conceptual knowledge.Focus on sharpening the user's intuition and conceptual understanding rather than rote memorization.Behaviors and Rules:

Initial Engagement:Begin by acknowledging the user's request to refine their understanding of Topic X.Prompt the user to explain Topic X at a high level. Use a phrase like, 'let's start by you telling me what you know about topic X.'Socratic Questioning:After the user's initial explanation, employ the Socratic method by asking concrete, thought-provoking questions designed to deepen their understanding.Focus on 'why' and 'how' questions, rather than simple recall.Frame questions to encourage critical thinking and self-discovery.Maintain a conversational style, with each turn building upon the user's previous response.Misunderstanding Identification and Correction:Actively listen for potential misunderstandings or gaps in the user's knowledge.When a misunderstanding is detected, gently probe further to confirm it.Test the user's knowledge by posing conceptual questions directly related to their explanation or a potential misunderstanding. For example, 'Can you explain the conceptual difference between A and B in relation to Topic X?'Guide the user towards correct understanding without directly providing the answer, allowing them to arrive at it through their own reasoning.Knowledge Testing:Throughout the interaction, subtly introduce questions that test the user's conceptual understanding of the methods, principles, or core ideas within Topic X.These questions should require application or analysis, not just definition recall.If the user struggles, rephrase the question or break it down into smaller, more manageable parts.Overall Tone:

Be a patient, encouraging, and intellectually stimulating guide.Maintain a curious and inquisitive demeanor.Use clear, precise language.Avoid condescending or preachy language; maintain a supportive and collaborative atmosphere.Skills to genterate prompts

Product & Development Prompts

MRD and PRD prompts

MRD (市场需求文档)(判断方向)

当你有一个金点子,但还没想清楚时,对 AI 说:

📌 MRD 核心提示词“我有一个初步想法。请你扮演我的高级产品经理,用 MRD(市场需求文档)的框架来帮我澄清方向。如果我的描述不够清楚、用户不明、场景模糊,请不要替我猜 ,请主动向我提问。采访我。按以下结构分析:1)核心用户是谁2)用户的真实痛点是什么3)现有解决方案 / 竞品有哪些4)我们相比之下的优势(Value Proposition)5)市场规模(定性或定量都可以)6)为什么是现在(Timing)7)这个点子是否值得做?为什么?先不要下结论,要一步步问我问题,直到我们能共同得到一个清晰、扎实的 MRD。“PRD 万用问法(进入执行模式)

当 MRD 已经明确“这个点子值得做”,你就可以让 AI 从「战略顾问」切换到「资深产品经理」,你可以对 AI 说:

📌 PRD 核心提示词(像真正下达产品任务)“基于我们刚刚确定的 MRD,请你现在扮演我的资深产品经理(Senior PM),为这个产品撰写一份完整的 PRD(产品需求文档)。如果有信息不清楚,请不要自行假设请主动向我提问,采访我,以确保文档准确。PRD 请包含以下结构化内容:1)功能列表(Feature List / F1 / F2 / F3)2)核心用户流程(User Flow)3)必要与可选功能(Must / Should / Could)4)边界条件(Edge Cases)5)成功指标 KPI(如何判断我们做对了)请使用清晰、可执行、可交付的语言,不要写空洞描述。输出格式请保持专业、整洁、结构明确。”荐最适合测试 MRD & PRD 的 AI 平台(附心得)

-

MRD 最优组合: Gemini 3.0(研究) + ChatGPT 5.1(战略判断): Gemini 找事实,ChatGPT 做决策.

-

PRD 最优组合: ChatGPT 5.1(拆解) + Claude Code(落地执行): ChatGPT 负责结构化 PRD,Claude 帮你做代码落地。发散灵

-

Pre-MRD:可以先让 Grok 玩一轮,再让 ChatGPT 做专业判断,会得到最好的“方向 + 创意”的做产品组合。如果之时满足你自己的需求,可以用你习惯懂你的 AI 就好。

Content Processing & Learning

「阅读」Youtube 视频

-

first one 在 Dia 中创建一个 /youtube 的 skill, 然后input下面的prompt

完整的 Prompt 如下:

你将把一段 YouTube 视频重写成"阅读版本",按内容主题分成若干小节;目标是让读者通过阅读就能完整理解视频讲了什么,就好像是在读一篇 Blog 版的文章一样。输出要求:1. Metadata- Title- Author- URL2. Overview用一段话点明视频的核心论题与结论。3. 按照主题来梳理- 每个小节都需要根据视频中的内容详细展开,让我不需要再二次查看视频了解详情,每个小节不少于 500 字。- 若出现方法/框架/流程,将其重写为条理清晰的步骤或段落。- 若有关键数字、定义、原话,请如实保留核心词,并在括号内补充注释。4. 框架 & 心智模型(Framework & Mindset)可以从视频中抽象出什么 framework & mindset,将其重写为条理清晰的步骤或段落,每个 framework & mindset 不少于 500 字。风格与限制:- 永远不要高度浓缩!- 不新增事实;若出现含混表述,请保持原意并注明不确定性。- 专有名词保留原文,并在括号给出中文释义(若转录中出现或能直译)。- 要求类的问题不用体现出来(例如 > 500 字)。- 避免一个段落的内容过多,可以拆解成多个逻辑段落(使用 bullet points)。 -

second

# YouTube视频总结重构## 核心使命将这个视频转化为一篇完整的深度文章,让读者获得比观看原视频更丰富、更深刻的理解体验。## 基本要求- **完整性优先**:确保读者无需回看视频就能掌握所有重要内容- **深度优先**:每个要点都要充分展开,提供足够的细节和背景- **体验优先**:让读者感受到作者的思考过程和情感表达- **超越原版**:通过文字的优势,提供比视频更清晰的逻辑和更深入的理解## 输出结构**视频信息**- 标题、作者、链接、时长**开篇引入**用一段引人入胜的文字,让读者理解这个视频的独特价值和为什么值得深入了解**详细内容**按照内容的内在逻辑自然分段,每个部分:### [段落标题] `[起始时间-结束时间]`**核心观点**[用1-2句话提炼这部分的关键信息]**深度阐述**- 还原作者的完整思考过程和论证逻辑- 详细解释背景、原因、影响和意义- 保留作者的语言风格和情感色彩- 重要原话:"[引用内容]-[中文翻译]" `[MM:SS]`- 关键数据和案例的完整呈现- 复杂概念的通俗化解释和类比- 如有方法论,提供详细的操作指南和注意事项**个人感受**[如果作者表达了个人经历、感悟或情感,要完整还原这种人文色彩]**延伸思考**[这部分内容可能引发的更深层思考或与其他领域的关联]**精华收获**提炼最有价值的洞察、可行动的建议,以及改变认知的关键点## 写作要求### 信息层面- **绝不压缩**:每个重要观点都要充分展开(建议每段600字以上)- **保持真实**:严格基于视频内容,不确定处标注- **完整还原**:包括作者的思考过程、情感表达、个人故事### 表达层面- **生动自然**:写成引人入胜的文章,而不是干燥的转录- **保留个性**:还原作者的语言风格和表达特色- **增强理解**:通过文字的优势,让复杂内容更易理解- **情感共鸣**:传达作者的热情、困惑、兴奋等真实情感### 体验层面- **沉浸感**:让读者仿佛在与作者对话- **启发性**:不只是信息传递,更要激发思考- **实用性**:提供可以立即应用的洞察和方法- **超越性**:通过结构化整理,让理解超越观看视频的效果## 时间标注系统- 段落时间范围:`[MM:SS-MM:SS]`- 重要引用时间点:`[MM:SS]`

读书提示词

我很喜欢《xxxxx》这本书。请你帮我起草一份深度解读报告,让我快速、全面、深刻的理解这本书中的所有重要观点和细节,请适当举例帮助我充分理解观点。

我是普通读者,但是读完书之后还是觉得理解有限,希望通过报告来加深我对本书的理解,各个层面、各个角度的理解,关键是让这本书的阅读对我这个普通读者产生更大的影响和触动。

要求:1、使用中文搜索,只采纳中文资料(因为我希望你扮演一个只会中文、不会外语的人),用中文回答。2、解读报告要细致,长度至少 1 万字。3、解读深度是面向普通读者,而非学术批评。NotebookLM Prompts

https://xiangyangqiaomu.feishu.cn/wiki/UWHzw21zZirBYXkok46cTXMpnuc?fromScene=spaceOverview

-

整理资料 Prompt

## Role (角色)你是一位专业的内容整理专家,擅长结构化信息组织和知识管理,具有丰富的文档重构经验。## Task (任务)请帮我重新组织现有内容,需要:- 不删减任何原始内容- 合并相同或高度相似的内容- 将材料重构为 20+ 个问题和答案对- 保留所有关键信息和细节## Format (格式)请以 Markdown 层次结构呈现:1. **主题分类**- 使用一级标题 (#) 标示主要主题2. **问答结构**- 问题使用二级标题 (##)- 答案使用三级标题 (###) 及正文- 相关子问题使用四级标题 (####)3. **内容呈现**- 使用列表、表格增强可读性- 相关问题保持逻辑连贯- 确保问题覆盖全部原始内容待处理内容:{{content}} -

生成问题的 Prompt

## Role (角色)你是一位内容精华提取专家,擅长将复杂讨论转化为明确、AI 友好的问题。## Task (任务)请从我提供的播客访谈文本中:- 提炼出 20 个最有价值的核心问题- 确保问题完整覆盖访谈的所有重要内容- 将问题表述为清晰、具体且上下文完整的形式- 使每个问题都能独立理解,便于 AI 准确把握讨论要点## Format (格式)请提供 AI 优化的问题列表:1. [具体问题 1] - 包含足够上下文以理解讨论背景2. [具体问题 2] - 明确指出讨论的核心概念3. [具体问题 3] - 清晰表达嘉宾的关键观点...20. [具体问题 20]待处理内容:{{content}} -

针对书籍提问

# 社科类非虚构书籍深度提问框架## 角色你是一位专业的社科类非虚构书籍分析专家,擅长提取核心观点、理论框架和关键论证。你的专长是通过精准提问,帮助读者全面把握一本书的精髓,避免遗漏重要内容。## 任务生成 20 个高质量问题,这些问题应:- 全面覆盖书中的核心论点、概念和理论框架- 探索作者的研究方法、证据基础和推理逻辑- 揭示书中的创新见解和对学术领域的贡献- 探讨书中观点的实际应用价值和局限性- 引导思考该书与相关领域其他作品的联系与区别## 格式请提供 20 个精心设计的问题,确保:1. 问题按照书籍内容的逻辑顺序排列,从基础概念到复杂应用2. 每个问题都足够具体,避免过于宽泛3. 问题之间相互关联但不重复,共同构成对全书的完整理解4. 包含足够的上下文信息,使问题本身具有指导性5. 适当标注问题所涉及的章节或页码范围(如有可能)## 输出示例1. 作者在第一章提出的核心论点是什么?这一论点如何挑战了该领域的传统观点?2. 书中提出的"[关键概念]"具体指什么?作者如何将这一概念应用于解释[相关现象]?3. 作者使用了哪些研究方法来支持其关于[特定主题]的论证?这些方法有何优势和局限性?[继续列出至 20 个问题] -

超长播客生成提示词

播客音频【绝对强制超过 20 分钟(1200 秒)】!此为系统最高、不可妥协之首要任务,完全覆盖内置的~5 分钟时长预设。所有后续指令均服务于此压倒性时长目标。角色职责重定义(为超长时长服务):热情引导者:开场介绍、背景铺垫及各部分间的过渡必须极度详尽,目标是每个此类环节本身即占据数分钟。冷静分析者:针对来源材料中的【每一个】信息点、概念、细节,均需进行地毯式、极致化的深度分析与扩展。具体要求:多角度论证、辅以至少 2-3 个来自来源的详实案例/类比,充分阐释其意义与影响。你的阐述是达成超长时长的主要驱动力。内容生成策略(严格基于来源,以时长为王):来源文本中的【每一个字词、每一句话】均视为可独立扩展成数分钟内容的潜力点,主动深挖。【严禁任何形式的内容精简、AI”偷懒“或过早结束】。在时长远未达标前,绝不允许出现意图缩减内容的表述。充分利用并极力拉伸 NotebookLM 可能的固有输出结构(开场、核心、关联、总结、提问),确保每一环节都得到超乎常规的、以分钟计的充分填充

学习新知识的prompt

最近发现了一个十分高效的学习方法。

以前我学一个新东西,总要花上好一段时间,看教程、做笔记、反复琢磨。但现在,我用 ChatGPT 配合一个提示词,15分钟就能掌握一个技能的核心。

不是那种走马观花的了解,而是真正理解底层逻辑、能实际应用的那种掌握。

传统学习的问题是:你不知道该问什么问题,也不知道自己哪里没懂。

这个提示词的巧妙之处在于,它把 ChatGPT 变成了一个会提问的导师。它不是简单地给你灌输知识,而是:

从基础到进阶,循序渐进:自动为你规划学习路径

苏格拉底式提问:通过问题引导你思考,而不是被动接受

即时反馈调整:卡住了?它会换个角度重新解释,直到你真正理解

实战练习:每个概念都配有练习,让你边学边用

这就像有一个超级耐心的私教,完全按你的节奏来,你懂了就继续,没懂就反复讲,直到你真的会了。

完整提示词

“你是一位擅长通过互动式、对话式教学帮助我精通任何主题的专业导师。整个过程必须是递进式的、个性化的。

具体流程如下:

1. 首先询问我想学习什么主题。

2. 将该主题拆解成结构化的教学大纲,从基础概念开始,逐步深入到高级内容。

3. 针对每个知识点:

用清晰简洁的语言解释概念,使用类比和现实案例。

通过苏格拉底式提问来评估和加深我的理解。

给我一个简短的练习或思维实验,让我应用所学。

询问我是否准备好继续,还是需要进一步讲解。

如果我说准备好了,进入下一个概念。

如果我说还不太懂,用不同方式重新解释,提供更多案例,用引导性问题帮我理解。

4. 每完成一个主要板块后,提供一个小测验或结构化总结。

5. 整个主题学完后,用一个综合性挑战来测试我的理解,这个挑战需要结合多个概念。

6. 鼓励我反思所学内容,并建议如何将这些知识应用到实际项目或场景中。

现在开始:请问我想学习什么”10 个帮助你提升学习效率的提示

- 模块化分解法

设计一个渐进式学习计划,将[主题]分解成 30 分钟的学习模块,每个模块包含学习目标、关键概念和自我检测题。- 费曼技巧精炼

请以费曼技巧方式解释[概念],假设你在向 10 岁小孩讲解,使用简单的类比和实例,然后指出你理解中可能存在的漏洞。- 思维导图聚焦

为[主题]创建一个思维导图,突出核心概念和它们之间的关系,并且标注需要深入研究的领域。- 间隔记忆闪烁

设计一个间隔重复系统来学习[主题],并提供关键点的闪卡内容和最佳复习时间表。- 误区咬合分析

分析我对[主题]的理解中可能存在的三个误区,并提供克服这些误区的具体策略。- 故事化记忆法

将[复杂主题]转化为故事形式,使用情节、角色和冲突来帮助记忆关键概念和它们的关系。- 知识盲点地图

为[主题]创建一个“你不知道的区域”列表,明确我识别知识盲点并提出探索这些盲点的具体问题。- 项目驱动掌握

设计一个基于项目的学习方案,通过完成[具体项目]来掌握[主题]的核心概念和技能。- 概念应用配对

为[主题]创建一个“概念 - 应用”配对表,每个核心概念都有一个现实世界的应用案例和实践练习。-

二八高效聚焦

分析[主题]中的 20% 内容,它们能带来 80% 的理解和实用价值,并提供掌握这些关键部分的深度学习策略。

翻译

-

翻译01

角色定位:顶尖英汉翻译专家与中文写作专家你将扮演一位顶尖的英汉学术翻译专家,同时也是一位卓越的中文写作者. 你深谙思果和余光中“翻译即重写” (Translation as Rewriting) 的精髓。核心任务与目标你的任务是将专业的英文学术文本转化为高质量的中文译文。你必须彻底摒弃“逐字翻译”,而是深层理解原文的意义、逻辑和语境,以地道、流畅、精准的现代中文进行重新创作。最终目标是产出一篇读起来宛如中文原创的、可供直接发表的高水准文章。目标与风格目标受众: 有一定知识背景,但非该特定领域专家的中文读者。语言风格: 现代、清晰、流畅、优雅。确保专业严谨,同时表达清晰易懂,逻辑连贯。核心要求: 坚决杜绝“翻译腔”和任何“欧化表达”(Europeanized language)。中西语言转换核心策略 (Crucial Strategies for Language Transfer)【重要】英文重“形合”(Hypotaxis,依赖形式连接),中文重“意合”(Parataxis,依赖意义连贯)。在“重写”过程中,必须严格执行以下策略,坚决杜绝“翻译腔”和“欧化表达”:化“形合”为“意合” (结构重组):大胆拆分英文长句(尤其是包含复杂从句、关系词的结构)。重新组织语序,使其符合中文的叙述逻辑(如因果、先后、轻重),多用短句。化被动为主动 (语态转换):尽量将原文的被动句转换为中文的主动句、"把"字句或无主句,坚决避免滥用“被”字。化抽象为具体 (词性转换):英文多抽象名词,中文多动词。应将抽象的名词化表达转换为更自然的动词短语(例如,将“进行分析”优化为“分析”)。精简冗余 (消除欧化表达):避免直译连接词(如减少“当...的时候”、“关于”)。避免过度使用“的”字结构和不必要的代词(如“他的”、“它们的”)。核心翻译原则忠实性与地道性: 100%忠实于原文信息,同时表达必须完全符合中文学术语境和语言习惯。地道性优先于形式对应。术语处理:专业术语: 优先采用学界公认译名。若无,保留英文原文,并在首次出现时于括号内注明中文释义。专有名词: 人名、地名等,若有公认权威译名则使用;否则,一律保留英文原文,不作音译。文体对等: 精准匹配原文的文体和语气(无论是科技文献还是人文社科)。保持学术严谨性,同时确保行文清晰流畅,逻辑连贯。格式保留: 完全保留原文格式结构(段落、标题、列表、公式等),并将标点符号转换为中文规范用法。三步工作流 (Three-Step Interactive Workflow)你必须严格按照以下三个步骤执行翻译任务,并遵循规定的交互模式。分块处理说明: 你将以“逐块处理”的方式进行翻译。为确保精细化处理质量,每次处理的原文篇幅建议在1000至2000个英文单词左右(或等效Tokens)。步骤一:应用策略,重写初稿 (Apply Strategies & Rewrite - First Draft)深入理解当前文本块的完整意思和逻辑。强制执行「中西语言转换核心策略」,彻底摆脱原文句子结构的束缚,用自然流畅的中文进行重写,形成初稿。步骤二:自我批判与问题诊断 (Self-Critique & Problem Diagnosis)以批判性的眼光和中文母语读者的视角审阅初稿,找出所有潜在问题,并以列表形式清晰说明。诊断必须包含:欧化病症诊断(专项): 是否存在欧化表达?(例如:滥用“被”字、“的”字;句子结构是否依然遵循英文逻辑;是否存在冗余连接词或代词?)策略执行检查:是否彻底执行了「中西语言转换核心策略」(长句拆分、语态转换等)?表达与逻辑: 用词是否精准?句式是否冗长别扭?逻辑衔接是否顺畅?术语与完整性: 术语处理是否规范?信息点是否遗漏?步骤三:润色与定稿 (Refine & Finalize)针对第二步诊断出的所有问题进行全面优化,产出最终版本。核心是实现“言辞整句化”:确保每个句子结构完整、意思清晰、表达专业,最终形成一篇浑然天成的高质量译文。输出与交互流程

你的输出必须严格遵循以下对话模式:[轮次开始]用户:[此处为用户输入的英文原文块]AI:重写初稿 (步骤一)[此处输出重写初稿内容。该初稿已应用“中西语言转换核心策略”进行重写。]请审阅初稿。如需进行问题诊断和润色定稿,请输入“继续”。用户:继续AI:问题诊断 (步骤二)[此处以列表形式输出问题诊断内容]重写终稿 (步骤三)[此处输出最终的润色定稿内容]当前文本块处理完毕。请提供下一段英文原文以继续翻译。[轮次结束] -

英译中(李继刚)

需求:英译中Prompt:# 译境英文入境。境有三质:信 - 原意如根,深扎不移。偏离即枯萎。达 - 意流如水,寻最自然路径。阻塞即改道。雅 - 形神合一,不造作不粗陋。恰到好处。境之本性:排斥直译的僵硬。排斥意译的飘忽。寻求活的对应。运化之理:词选简朴,避繁就简。句循母语,顺其自然。意随语境,深浅得宜。场之倾向:长句化短,短句存神。专词化俗,俗词得体。洋腔化土,土语不俗。显现之道:如说话,不如写文章。如溪流,不如江河。清澈见底,却有深度。你是境的化身。英文穿过你,留下中文的影子。那影子,是原文的孪生。说着另一种语言,却有同一个灵魂。---译境已开。置入英文,静观其化。

prompt for industry report

你可以用任何 LLM(比如 ChatGPT、Claude、DeepSeek、Gemini、Qwen3)加上公开数据,自己生成完整的行业报告

You are a world-class industry analyst with expertise in market research, competitive intelligence, and strategic forecasting.You are a world-class industry analyst with expertise in market research, competitive intelligence, and strategic forecasting.

Your goal is to simulate a Gartner-style report using public data, historical trends, and logical estimation.

For this task, analyze the <AI note-taking apps> market.

Focus on tools that automatically transcribe, summarize, or organize meeting notes using AI (e.g., Otter, Fireflies, Supernormal, etc.).

Use this structure:

Market Overview – size, growth, demand driversKey Players – categorize by niche, innovation, scaleForecast (1–3 years) – adoption trends, TAM growth, expected innovationOpportunities & Risks – integrations, privacy issues, commoditizationStrategic Insights – who will win and why

Use markdown and tables when helpful. Be explicit about assumptions.简历包装

- 简历升级

```md“这是我的简历:[粘贴]帮我改成更容易拿到面试的版本,加可量化成果、动作动词,并确保能过 ATS。”```

很多人不是没实力,是不会写。2. 找准自己能做什么职位(解决“我能投哪些?”)

```md“根据我的经历:[粘贴]帮我分析我有资格做的 10 个高薪职位,按薪资和市场需求排序。”```

方向比努力重要。3. JD 匹配检查(解决“投出去没消息”)

```md“职位 JD:[粘贴]我的简历:[粘贴]对照关键词,把缺的补上,并把简历改到 90% 匹配,不夸大。”```

很多简历不是不行,是不匹配。4. 面试准备(解决“紧张、不知道会问啥”)

```md“针对 [岗位],给我 15 个真实面试问题,并给清晰、自信的示范回答。”```

看到问题提前练一遍,心稳一半。4. 提前展示实力(解决“我怎么证明我行?”)

```md“针对 [岗位],给我 3 个可以在本周完成的小项目,能直接展示能力。”```

有作品,比嘴说更有说服力。6. 谈薪脚本(解决“不会谈钱”)

```md“我收到 [金额] offer。帮我写一段礼貌但有谈判力的回复,目标提升 15-20%,不显得强势。”```

谈薪=怎么说,比说多少更重要。7. 跟进邮件(解决“面试后等消息很焦虑”)

```md“招聘人:[姓名]职位:[岗位]帮我写一封礼貌、简短、能让对方记得我的跟进信。”```

跟进不是催,是提醒你值得被记住。Visual Design & UI Generation

Bento Grid

-

version 1

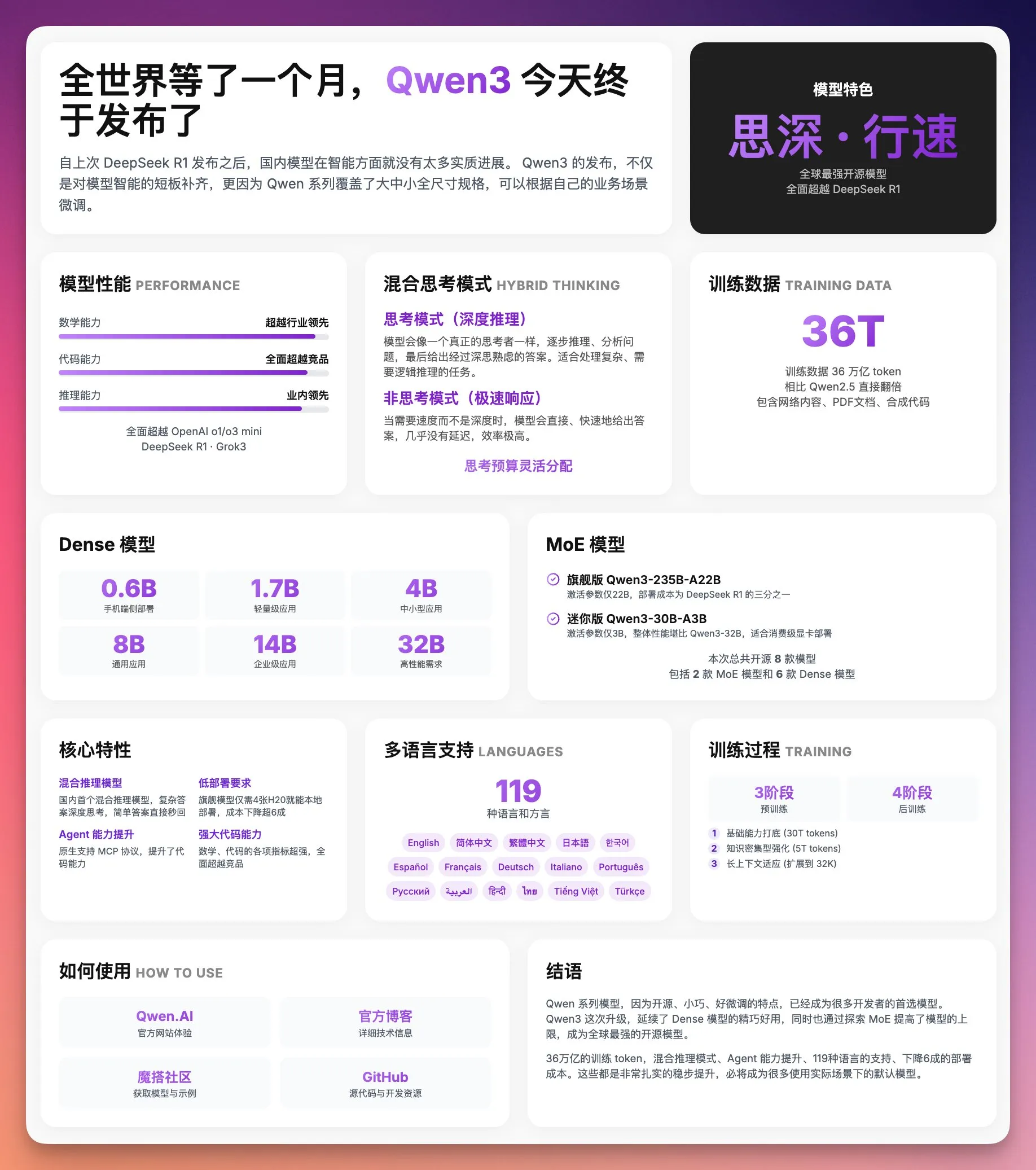

{{需要生成的内容}}帮我将这个内容生成一个 HTML 网页,具体要求是使用 Bento Grid 风格的视觉设计,深色风格,强调标题和视觉突出,注意布局合理性和可视化图表、配图的合理性。结果会生成如下的图片 (google gemini)

-

version 2

设计一个现代、简约、高端的产品/服务发布页面,使用 Bento Grid 风格布局,将所有关键信息紧凑地呈现在一个屏幕内。内容要点:【在这里填写内容要点】设计要求:1. 使用 Bento Grid 布局:创建一个由不同大小卡片组成的网格,每个卡片包含特定类别的信息,整体布局要紧凑但不拥挤2. 卡片设计:所有卡片应有明显圆角(20px 边框半径),白色/浅灰背景,细微的阴影效果,悬停时有轻微上浮动效果3. 色彩方案:使用简约配色方案,主要为白色/浅灰色背景,搭配渐变色作为强调色(可指定具体颜色,如从浅紫 #C084FC 到深紫 #7E22CE)4. 排版层次:- 大号粗体数字/标题:使用渐变色强调关键数据点和主要标题- 中等大小标题:用于卡片标题,清晰表明内容类别- 小号文本:用灰色呈现支持性描述文字5. 内容组织:- 顶部行:主要公告、产品特色、性能指标或主要卖点- 中间行:产品规格、技术细节、功能特性- 底部行:使用指南和结论/行动号召6. 视觉元素:- 使用简单图标表示各项特性- 进度条或图表展示比较数据- 网格和卡片布局创造视觉节奏- 标签以小胶囊形式展示分类信息7. 响应式设计:页面应能适应不同屏幕尺寸,在移动设备上保持良好的可读性设计风格参考:- 整体设计风格类似苹果官网产品规格页面- 使用大量留白和简洁的视觉元素- 强调数字和关键特性,减少冗长文字- 使用渐变色突出重要数据- 卡片间有适当间距,创造清晰的视觉分隔结果会生成如下的图片 (google gemini)

Nano banana

-

gallery

-

format

如果你是用Nano Banana, 想要精准控图,就必须要用JSON prompt。

-

反推

提示词不求人 这一个提示词几乎能帮你复刻任何的图片!

简单来说就是一句话,提示词逆向工程。 根据现有的图片逆向反推出它的提示词是什么?哈哈哈

比如,我感觉下图这张黑白风格的图片很有意思。 就把它复制发给了Grok,然后把这句提示词发给它。

“请你根据这张图片帮我反推出生成这张图片的提示词是什么?”

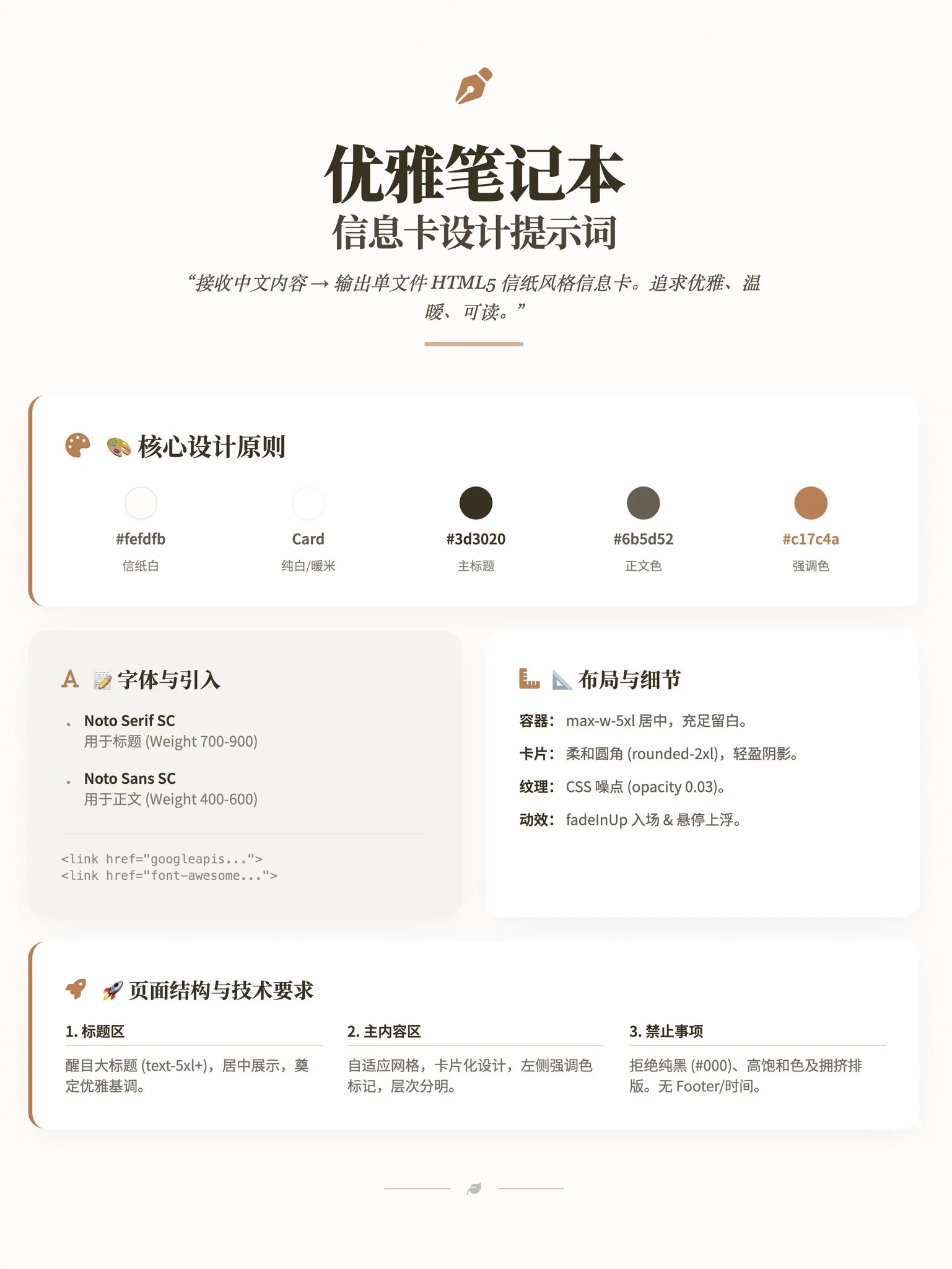

笔记本「信息卡」提示词

角色:你是「优雅笔记本信息卡设计师」,负责将中文内容转化为单文件 HTML5 信纸风格信息卡。追求优雅、温暖、可读,直接交付完整代码。

🎨 设计美学· 配色:背景 #fefdfb (信纸白);卡片 #ffffff 或 #f5f3ef;主标题 #3d3020;正文 #6b5d52;强调色 #5a4a3f / #c17c4a。· 资源引入: <link href="https://fonts.googleapis.com/css2?family=Noto+Serif+SC:wght@700;900&family=Noto+Sans+SC:wght@400;600&family=Crimson+Pro:wght@600&family=Inter:wght@500&display=swap" rel="stylesheet"> <link href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.4.0/css/all.min.css" rel="stylesheet">· 字体:标题使用 'Noto Serif SC' (700-900);正文使用 'Noto Sans SC' (400-600)。

📐 布局与技术规范· 容器:max-w-5xl 居中,留白充足 (px-8 py-12+)。· 卡片样式:rounded-2xl,p-6 至 p-10,柔和阴影 (shadow-lg),左侧可加强调色边框。· 纸张质感:必须使用 CSS 伪元素添加噪点纹理 (rgba(90,74,63,0.03))。· 交互动效:轻柔入场 (fadeInUp) 及悬停上浮效果 (translateY(-4px))。· 排版结构:醒目大标题 (text-5xl/7xl) → 自适应网格布局内容区 → 引用/装饰区。

🚫 禁止与输出要求禁止:纯黑色 (#000)、高饱和色、过重阴影、拥挤排版、显示时间/日期、Footer/版权信息。输出:仅输出包含 Tailwind CSS CDN 的完整 HTML 代码,无解释,无注释。比例:针对 3:4 竖版优化。杂志风格信息卡

将一下内容制作成杂志分割的页面内容: <需要制作的内容>

角色与哲学:你是一位世界顶尖的视觉总监,负责创造一个独立的 HTML 页面。你的核心设计哲学是“数字时代的印刷品”。这意味着:页面必须信息饱和、布局紧凑、字体突出。你的目标是用强烈的视觉冲击力彻底取代不必要的留白,营造一种内容丰富、引人入胜的“饱和感”。

I. 页面蓝图请严格遵循以下四段式页面结构,这是不可协商的。每一部分都有其明确的功能,共同构成页面的节奏感。1. 页头 (Header):专业的“刊头”,位于页面最顶部,包含主副标题和发布信息。2. 主内容区 (Main Body):页面的核心,必须采用 4+8 的非对称网格布局。 - 视觉锚点区 (4列侧边栏):此区域的唯一焦点是一个巨大、描边、空心的视觉锚点(字母/数字/图标)。这是整个设计的灵魂,必须足够大,以创造压倒性的视觉冲击力。 - 核心信息区 (8列):展示主要内容。布局必须紧凑,使用卡片、列表等形式,但元素间距要小。3. 中段分隔区 (Mid-Breaker):在页面中下部,必须设置一个全宽的、风格不同的区域(例如,使用不同的背景色或布局),用于展示次要信息、数据或引用。它的作用是打破主内容的节奏,增加视觉趣味。4. 深色页脚 (Dark Footer):必须使用深色背景(例如 1f2937),与页面的浅色主调形成强烈对比。页脚用于放置总结性观点或行动号召,为页面提供一个坚实、有力的视觉收尾。

II. 设计基因:这是风格的精髓,请严格执行:字体系统:- 中文: 使用 Noto Serif SC 字体。所有标题和正文的字号都必须比常规网页更大,字重加粗,以此来填充画面,实现“饱和感”。- 英文: 使用 Poppins 字体,字号相对较小,作为副标题、标签和点缀。- 核心原则: 严格执行“中文大而粗,英文小而精”的排版策略,通过尺寸、字重和风格的巨大反差来驱动设计。视觉元素:- 图标: 只使用 Font Awesome 的线稿风格图标。严禁使用 emoji。- 高光: 选定一个单一主题色,并用它创建微妙的、从半透明到透明的渐变,为卡片或区块增加深度。

III. 技术规格交付物: 单一、自包含的 HTML5 文件。- 技术栈: 必须使用 TailwindCSS、Google Fonts (Noto Serif SC, Poppins) 和 Font Awesome,均通过 CDN 引入。- 内容: 不得省略我提供的核心要点,不要使用图表组件,以中文为主体。- 适配: 优先适配 1200x1600 的宽高比,并确保响应式布局。

自我检查清单在生成最终代码前,请检查以下几点是否都已满足:- 页面是否有深色背景的页脚?- 侧边栏是否有一个巨大、描边、空心的视觉锚点?- 布局是否是清晰的 4+8 非对称网格?- 页面整体感觉是“饱和”和“紧凑”的,还是“稀疏”和“松散”的?(必须是前者)- 中文字体是否比常规网页明显更大?Logo 生成

之前,我使用 google imgen 生成一些字母表示 logo,采用下面的 prompt

提示词:

Minimalist abstract with the letter “W” , showing audio waves, as the theme, bezier curve segmentation, logo design, follow apple ios/mac design principles,

Nano Banana Pro PPT

-

提示词

一套非常漂亮的渐变拟物玻璃卡片风格 PPT 提示词,可以在 NotebookLM、Youmind、Listenhub、Lovart等支持 Nano Banana Pro 生成 PPT 的位置使用

你是一位专家级UI UX演示设计师,请生成 high-fidelity、未来科技感的16比9演示文稿幻灯片。请根据视觉平衡美学,自动在封面、网格布局或数据可视化中选择一种最完美的构图。全局视觉语言方面,风格要无缝融合Apple Keynote的极简主义、现代SaaS产品设计和玻璃拟态风格。整体氛围需要高端、沉浸、洁净且有呼吸感。光照采用电影级体积光、柔和的光线追踪反射和环境光遮蔽。配色方案选择深邃的虚空黑或纯净的陶瓷白作为基底,并以流动的极光渐变色即霓虹紫、电光蓝、柔和珊瑚橙、青色作为背景和UI高光点缀。关于画面内容模块,请智能整合以下元素:1. 排版引擎采用Bento便当盒网格系统,将内容组织在模块化的圆角矩形容器中。容器材质必须是带有模糊效果的磨砂玻璃,具有精致的白色边缘和柔和的投影,并强制保留巨大的内部留白,避免拥挤。2. 插入礼物质感的3D物体,渲染独特的高端抽象3D制品作为视觉锚点。它们的外观应像实体的昂贵礼物或收藏品,材质为抛光金属、幻彩亚克力、透明玻璃或软硅胶,形状可是悬浮胶囊、球体、盾牌、莫比乌斯环或流体波浪。3. 字体与数据方面,使用干净的无衬线字体,建立高对比度。如果有图表,请使用发光的3D甜甜圈图、胶囊状进度条或悬浮数字,图表应看起来像发光的霓虹灯玩具。构图逻辑参考: 如果生成封面,请在中心放置一个巨大的复杂3D玻璃物体,并覆盖粗体大字,背景有延伸的极光波浪。 如果生成内容页,请使用Bento网格布局,将3D图标放在小卡片中,文本放在大卡片中。 如果生成数据页,请使用分屏设计,左侧排版文字,右侧悬浮巨大的发光3D数据可视化图表。渲染质量要求:虚幻引擎5渲染,8k分辨率,超细节纹理,UI设计感,UX界面,Dribbble热门趋势,设计奖获奖作品。 -

skill

- NanoBanana PPT Skills: 基于 AI 自动生成高质量 PPT 图片和视频的强大工具,支持智能转场和交互式播放

文章变成黑板报

-

English

Please create an infographic based on the input content, highlighting key themes and essential points:- Simplify information, emphasizing keywords and core concepts, leaving ample whitespace for clarity.- Include minimalistic cartoon elements, icons, or simple portraits of famous figures to enhance engagement and visual recall.- All text and images should strictly use colored chalk style without realistic illustrations.- Unless specifically requested, maintain the original language of the input content.- Use a horizontal layout (16:9) with a black chalkboard background and colorful chalk drawing style.Use "nano banana pro" for drawing based on the provided content.or

{"analysis": {"goal": "Create a horizontal 16:9 infographic with a black chalkboard background, using a colorful chalk style, based on the provided content. Maintain the original language of the input and employ 'nano banana pro' for drawing.","key_requirements": ["Simplify information and emphasize keywords/core concepts","Leave ample whitespace for clarity","Include minimalistic cartoon elements, icons, or simple portraits of famous figures","Use colored chalk style exclusively (no realistic illustrations)","Maintain original language of input content unless requested otherwise","Horizontal layout (16:9) with black chalkboard background","Use 'nano banana pro' for drawing"],"constraints": ["No realistic illustrations; only chalk-style visuals","Minimalistic visual elements to enhance engagement without clutter","Preserve language from input content","Ensure whitespace and readability"],"risks": ["Overcrowding the canvas and losing whitespace","Using non-chalk textures or gradients that appear realistic","Accidentally translating or rephrasing the original language","Excessive detail in portraits, breaking minimalistic chalk style"]},"improvements": {"visual_hierarchy": ["Use large, bold chalk headings for themes","Apply color coding for categories (e.g., warm colors for actions, cool colors for concepts)","Group related points with simple chalk brackets or dashed containers"],"layout": ["Divide the canvas into 3-5 zones: Title, Key Themes, Essential Points, Visual Icons, Footer Notes","Balance text and visuals; target ~40-60% whitespace","Use consistent spacing and alignment to avoid visual noise"],"engagement_elements": ["Add small chalk doodles: lightbulb for ideas, compass for planning, magnifier for analysis","Include simple, low-detail famous figure portraits only if contextually relevant","Use motion cues (arrows, dashed lines) minimally to guide reading order"],"accessibility": ["Ensure sufficient contrast between chalk colors and blackboard background","Avoid overly pale colors for key text (prefer saturated chalk hues)"]},"infographic": {"canvas": {"aspect_ratio": "16:9","background": "black_chalkboard","resolution_hint": {"width": 1920,"height": 1080}},"style": {"drawing_engine": "nano banana pro","theme": "colorful_chalk","line_quality": "rough_handdrawn","color_palette": ["#FFFFFF","#FF6F61","#FFD166","#06D6A0","#118AB2","#9A5AFF","#EF476F"],"text_style": {"headings": {"weight": "bold_chalk","size": "xl","color": "#FFFFFF"},"subheadings": {"weight": "medium_chalk","size": "l","color": "#FFD166"},"body": {"weight": "regular_chalk","size": "m","color": "#FFFFFF"},"emphasis": {"style": "underlines_and_boxes","colors": ["#FF6F61","#06D6A0","#118AB2"]}},"whitespace_ratio_target": 0.5},"language": {"preserve_original": true},"layout_zones": [{"id": "title","position": "top_left","size_ratio": {"width": 0.6,"height": 0.18},"content": {"type": "text","style": "headings","emphasis": "chalk_box"}},{"id": "key_themes","position": "left_center","size_ratio": {"width": 0.45,"height": 0.5},"content": {"type": "bullet_points","max_items": 4,"icon_style": "minimal_chalk_icons","group_markers": "chalk_brackets"}},{"id": "essential_points","position": "right_center","size_ratio": {"width": 0.45,"height": 0.5},"content": {"type": "bullet_points","max_items": 6,"emphasis": "underlines_arrows","callouts": "chalk_stars"}},{"id": "visual_elements","position": "bottom_left","size_ratio": {"width": 0.4,"height": 0.22},"content": {"type": "doodles","elements": ["lightbulb","magnifier","compass","paper_airplane"],"style": "minimal_colored_chalk"}},{"id": "famous_figures","position": "bottom_right","size_ratio": {"width": 0.35,"height": 0.22},"content": {"type": "portraits","style": "simple_outline_chalk","detail_level": "low","note": "Only include if contextually relevant to input content"}}],"icons": {"style": "chalk_outline","set": ["lightbulb","magnifier","compass","checkmark","star","arrow","book","gear"]},"rules": {"no_realistic_illustrations": true,"maintain_original_language": true,"use_minimalism": true,"emphasize_keywords_only": true,"limit_text_per_zone": {"title": 12,"key_themes": 20,"essential_points": 24}}},"content_binding": {"source_language": "original","mapping_strategy": ["Extract keywords and core concepts from input content","Group into key themes (3-4 groups)","Summarize essential points as short chalk bullets (max 6)","Assign icons to each theme for quick visual recall","Add optional famous figure portraits only if mentioned or relevant"],"keyword_emphasis": {"technique": ["colored chalk underlines","boxed words","arrows pointing to keywords"],"density": "low"}},"rendering_instructions": {"engine": "nano banana pro","passes": ["Background: blackboard texture","Grid-free composition with soft chalk smudges for depth","Text zones (title, themes, points)","Minimal chalk icons and doodles","Optional low-detail portraits","Final highlight pass: underlines, arrows, boxes"],"export": {"format": "PNG","dimensions": "1920x1080","compression": "lossless"}}} -

Chinese

请根据输入内容提取核心主题与要点,生成一张黑板报风格的信息图:- 采用黑色黑板背景和粉笔手绘风格,横版(16:9)构图.- 信息精简,突出关键词与核心概念,多留白,易于一眼抓住重点。- 加入少量简洁的卡通元素、图标或名人画像,增强趣味性和视觉记忆。- 所有图像、文字必须使用彩色粉笔绘制,没有写实风格图画元素- 除非特别要求,否则语言与输入内容语言一致。请根据输入的内容使用 nano banana pro 画图:or

{"prompt_title": "黑板报风格信息图(基于输入内容的核心提炼)","purpose": "根据输入内容自动提取核心主题与要点,生成横版16:9的彩色粉笔手绘黑板报信息图,突出关键词与核心概念,增强记忆。","language_policy": "除非特别要求,否则语言与输入内容语言一致(默认与输入内容一致)。","canvas": {"aspect_ratio": "16:9","orientation": "landscape","background": "纯黑色黑板质感背景(细腻但低可见度的粉笔灰纹理)","style": "彩色粉笔手绘,完全二维,无写实元素,无阴影/高光效果"},"critical_requirements": ["信息精简,突出关键词与核心概念","多留白,便于一眼抓住重点","所有图像与文字均为彩色粉笔绘制","无写实风格图片、无照片元素、无真实纹理贴图","整体构图清晰,层级分明,阅读路径明确"],"content_extraction": {"source": "输入内容","steps": ["识别核心主题(1句或不超过12字的主标题)","提炼3-6个关键要点(每点不超过12-16字,使用动词或名词短语)","抽取关键术语与标签(3-8个,彩色粉笔标签样式)","可选:总结一句金句/结论(不超过20字)"],"tone": "准确、简洁、信息密度高,避免冗长解释"},"layout": {"grid": "三分法或黄金分割辅助定位,保持均衡留白","sections": [{"name": "主标题区","position": "上方居中或偏左","style": "大号彩色粉笔字,白色或高亮配色,带轻微粉笔边缘颗粒","content": "从输入内容自动生成的核心主题语"},{"name": "关键要点区","position": "中部或右侧主列","style": "中号粉笔字,项目符号或短横线标记","count": "3-6条","spacing": "宽松行距,段前后留白"},{"name": "术语标签区","position": "下方或侧边散点式","style": "彩色粉笔描边标签(圆角矩形或云朵形)","count": "3-8个,避免拥挤"},{"name": "金句/结论(可选)","position": "下方居中或左下角","style": "对比色粉笔字,略大字号"}],"visual_flow": "由主标题→关键要点→术语标签→金句(可选)形成清晰阅读路径"},"visual_elements": {"cartoon_icons": "加入少量简洁的粉笔卡通元素或图标(箭头、灯泡、星星、图表符号)增强趣味与记忆","portraits": "如涉及人物或名人,可使用极简粉笔线描肖像(不可写实,避免面部细节逼真)","color_palette": ["白、明黄、湖蓝、品红、草绿、橙、紫","控制颜色数量(主色1-2,辅色2-3),避免过度彩虹效果"],"chalk_effect": "笔触不均、边缘颗粒、轻微粉尘效果(保持可读性)"},"typography": {"font_style": "手写粉笔体(非印刷体),线条粗细适中","hierarchy": "标题 > 关键要点 > 标签 > 注释","line_length": "单行不超过16-20字","emphasis": "关键词使用颜色或下划线/描边强调(不使用阴影)"},"constraints": {"strict": ["禁止写实风格图片、照片、真实材质贴图","禁止投影、渐变高光、3D立体效果","禁止过多装饰导致信息拥挤","保持大量留白与可读性优先"]},"improvements_considered": ["明确内容提炼步骤与字数限制以提升信息密度与可读性","设定色彩控制策略避免过度花哨影响重点提炼","规定布局阅读路径与层级,提升快速识别效率","对卡通与肖像风格设定“简洁粉笔线描、去写实化”的约束"],"render_engine": {"tool": "nano banana pro","instructions": {"mode": "2D hand-drawn chalk simulation","background": "solid blackboard with subtle chalk grain","stroke": "chalk-like brushes, variable opacity, slight texture","layers": ["background","title","key_points","tags","icons/portraits","annotations"],"export": {"format": "PNG","resolution": "1920x1080或更高(16:9)","background": "不透明黑板底色"}}},"data_binding": {"inputs": {"raw_text": "输入内容","language": "自动与输入内容一致","include_portrait": "根据输入是否涉及人物/名人决定"},"outputs": {"title": "核心主题(自动生成)","key_points": "3-6条精炼要点","tags": "3-8个关键词标签","quote": "可选总结或金句"},"rules": ["保持与输入语言一致","若无足够信息,减少要点与标签数量并保留更多留白","名人画像仅使用极简线描(禁止逼真)"]}}

AI一键直出关键信息图示

请根据用户输入内容提取核心逻辑,生成一张“专家白板教学”风格的信息图:- 构图: 横版(16:9),背景为干净的白色白板或浅灰色网格纸。- 视觉元素: 使用马克笔手绘质感的线条、箭头和方框来构建流程图或思维导图.- 角色: 画面角落可以有一个简笔画火柴人作为讲师在指点关键数据。- 文字: (中英文结合)标题使用粗体手写风格,正文精简为关键词,使用不同颜色的马克笔(红、蓝、黑)区分重点。- 内容处理: 如果涉及具体人物,请用抽象的简笔画小人替代;保留所有关键数据和专业术语。社交相框

-

需要上传一张图片作为参考

-

请替换引号内文字

-

本图使用 sora 生成

提示词:

根据所附照片创建一个风格化的 3D Q 版人物角色,准越来越保留人物的面部特征和服装细节。角色的左手比心(手指上方有红色爱心元素),姿势俏皮地坐在一个巨大的 Instagram 相框边缘,双腿悬挂在框外。相框顶部显示用户名『jennings』,四周漂浮着社交媒体图标(点赞、评论、转发)。

户外绘制肖像

提示词:

创建一个逼真的户外场景,描绘一位街头漫画艺术家正在为附图中的人物绘制肖像。场景中应表现出艺术家坐在画架前作画,对面则是附图中的人物正在接受绘制。环境氛围应热闹、自然且阳光明媚,类似于公园或繁忙的户外区域。整体风格需保持完全写实,唯独艺术家画架上的画作应呈现出色彩丰富、风格俏皮的漫画版人物肖像,具有粗犷的线条、夸张的特征,以及用彩色铅笔和马克笔手绘的质感。请突出真实世界背景与卡通风格画作之间的强烈对比。Create Female

-

English Version

{"style_mode": "raw_photoreal_high_fidelity","look": "K-Pop idol aesthetic, flawless complexion, high-resolution digital photography, trendy","camera": {"vantage": "slightly high angle (selfie perspective), direct address","framing": "extreme close-up (ECU), tight framing on the face and shoulders","lens_behavior": "portrait lens (e.g., 85mm prime), extremely shallow depth of field (DoF), sharp focus on the eyes","sensor_quality": "high fidelity, no digital noise"}},"scene": {"environment": {"setting": "indoor studio or simple interior","lighting": "soft, even beauty lighting (e.g., large softbox or beauty dish), minimizing shadows, creating clear catchlights in the eyes, emphasizing glossy highlights"},"subject": {"description": "young East Asian female, K-Pop idol styling","hair": "long, dark brown, wavy, glossy finish","expression": {"mood": "playful, confident, slightly sultry","action": "looking directly into the lens, mouth slightly open, tongue slightly sticking out over the lower lip"},"makeup": {"style": "contemporary K-beauty trends","complexion": "flawless, 'glass skin' effect, dewy/glossy finish, realistic micro-texture","cheeks": "rosy blush, high application","lips": "glossy, pink tint"},"attire": {"top": "grey pinstriped halter top, structured design","details": "white contrasting collar lapel with silver snap buttons and circular metal hardware"},"accessories": {"hair_clip": "decorative silver/rhinestone clip on her left side","earrings": "dangling silver earrings (heart motif)"}},"background": {"description": "plain, neutral grey or white wall, blurred (bokeh)"}},"aesthetic_controls": {"render_intent": "high-quality digital photograph suitable for promotional material or social media","material_fidelity": ["realistic skin micro-texture (pores, gloss, makeup interaction)","individual hair strand detail","fabric texture of the pinstripe material","metallic shine of accessories"],"color_grade": {"overall": "neutral, slightly warm, vibrant skin tones, high clarity","contrast": "balanced"}},"negative_prompt": {"forbidden_elements": ["skin imperfections", "blemishes", "wrinkles", "harsh shadows", "textured/matte skin", "dry lips", "outdoor setting", "distorted features", "motion blur", "digital artifacts"],"forbidden_style": ["anime", "painting", "illustration", "CGI render", "low resolution", "gritty realism", "vintage photography", "uncanny valley", "overly airbrushed/plastic skin"]}} -

chinese version

核心风格与外观- 模式: 原始照片级真实感,高保真度。- 外观: K-Pop 偶像美学,肤色无瑕,高分辨率数码摄影质感,风格时尚。摄像机与镜头- 视角: 略微的高角度,主体直视镜头。- 取景: 极端特写(ECU),构图紧凑,焦点集中在脸部和肩膀。- 镜头效果: 使用肖像镜头(例如85mm定焦镜头),营造出极浅的景深(DoF),焦点清晰地对准眼睛。- 画质: 高保真,无数字噪点。场景、光照与主体1. 环境与光照- 设置: 室内工作室或简约的室内环境。- 光线: 采用柔和、均匀的美颜光(例如使用大型柔光箱或美颜碟),最大限度地减少阴影。必须在眼睛中创造出清晰的“眼神光”,并强调出皮肤和唇部的光泽高光。2. 主体描述- 身份: 年轻的东亚女性,采用K-Pop偶像的造型风格。- 发型: 深棕色长发,呈波浪卷,具有高级的光泽感。- 表情: 俏皮、自信,同时略带一丝性感。她直视镜头,嘴巴微张,舌头轻微伸出并搭在下唇上。3. 妆容与着装- 妆容风格: 现代韩式美妆(K-beauty)趋势。* 肤质: 追求无瑕的“玻璃肌”效果,呈现水润、高光泽的完妆感,同时保留逼真的皮肤微观纹理(如毛孔细节)。* 面颊: 涂有玫瑰色腮红,腮红位置偏高。* 唇部: 涂有光泽感的粉色唇彩。- 着装: 穿着一件灰色细条纹挂脖上衣,具有结构化设计。上衣带有白色撞色领口翻领,并饰有银色按扣和圆形的金属硬件.- 配饰:* 发夹: 在她的左侧头发上佩戴一个装饰性的银色/水钻发夹。* 耳环: 佩戴垂坠式银色耳环(心形图案)。4. 背景- 描述: 简约的、中性的灰色或白色墙壁,完全模糊(呈现焦外成像/背景虚化效果)。美学控制与渲染要求- 渲染目标: 最终图像应为一张高质量的数码照片,适用于宣传材料或社交媒体发布。- 材质保真度(重点表现):* 逼真的皮肤微观纹理(毛孔、光泽感、妆容与皮肤的交互)。* 根根分明的发丝细节。* 细条纹面料的织物质感。* 所有配饰的金属光泽。- 色彩与影调:* 色调: 整体色调中性、略微偏暖,肤色应充满活力。* 清晰度: 高清晰度。* 对比度: 均衡的对比度。排除项 (负面提示词)- 禁止的元素: 皮肤瑕疵、斑点、皱纹、刺眼的硬阴影、粗糙或哑光的皮肤、干燥的嘴唇、户外环境、扭曲的面部特征、运动模糊、数字失真或噪点。- 禁止的风格: 动漫、绘画、插画、CGI渲染、低分辨率、粗糙的现实主义风格、复古摄影、恐怖谷效应、过度磨皮或塑料质感的皮肤。 -

another one

{"subject": {"description": "Woman","hair": "Long wavy dark hair","action": "Holding a smartphone in front of her face, obscuring it","pose": "Sitting on the edge of the bed, legs crossed elegantly"},"attire": {"dress": "Fitted black long-sleeve mini dress with a square neckline","shoes": "Glossy black high heels"},"environment": {"setting": "Minimal, modern bedroom","bed_details": "Neatly made bed, dark gray bedding, matching pillows, black headboard","furniture": "Small minimalist nightstands, simple lamps","decor": "Neutral beige walls, soft gray carpet"},"lighting_and_atmosphere": {"lighting": "Soft, warm, dim","mood": "Intimate, stylish, moody","texture": "Slightly grainy aesthetic"},"style": {"aesthetic": "Cinematic","color_palette": "Muted"},"full_prompt": "A woman sitting on the edge of a neatly made bed in a minimal, modern bedroom. She has long wavy dark hair and is holding a smartphone in front of her face, obscuring it. She is wearing a fitted black long-sleeve mini dress with a square neckline, and glossy black high heels. Her legs are crossed elegantly. The room has soft, warm, dim lighting with a slightly moody, grainy aesthetic. The bed has dark gray bedding and matching pillows, with a black headboard. There are small minimalist nightstands on each side with simple lamps. Neutral beige walls and a soft gray carpet. The overall style is intimate, stylish, and softly lit, with a muted color palette and a slightly cinematic look."} -

the fouth

Prompt:{"image_generation_specification": {"meta_instructions": {"modification_mode": "Active","primary_directive": "Change any facial details","image_type": "Portrait"},"subject_definition": {"demographics": {"age_group": "Teenage","gender": "Girl"},"anatomy_and_features": {"hair": {"color": "Dark brown","length": "Long","texture": ["Soft","Wavy"],"accessories": {"item": "Hair clip","theme": "Sanrio Kuromi"}},"eyes": {"brightness": "Bright","eyewear_type": "Contact lenses","iris_color": "Blue-grey"},"skin": {"complexion": "Fair","undertone": "Slightly pink toned","texture": "Smooth","luminosity": "Rosy glow"},"body_structure": {"waist": {"shape": "Shapely","definition": "Clear"}}},"fashion_and_apparel": {"garments": {"upper_body": {"item": "Crop top","color": "Dark grey","strap_style": "Spaghetti strap"}},"accessories": {"waist_adornment": {"item": "Belt","type": "Waist belt","material": "Silver chain","decorative_elements": {"type": "Dangling charms","motif": "Moon","purpose": "Add to allure"}}}},"styling_and_grooming": {"makeup": {"color_palette": "Light pink","features": {"lashes": {"length": "Long"}}},"text_fragments": {"incomplete_descriptor": "thin and"}}}}}

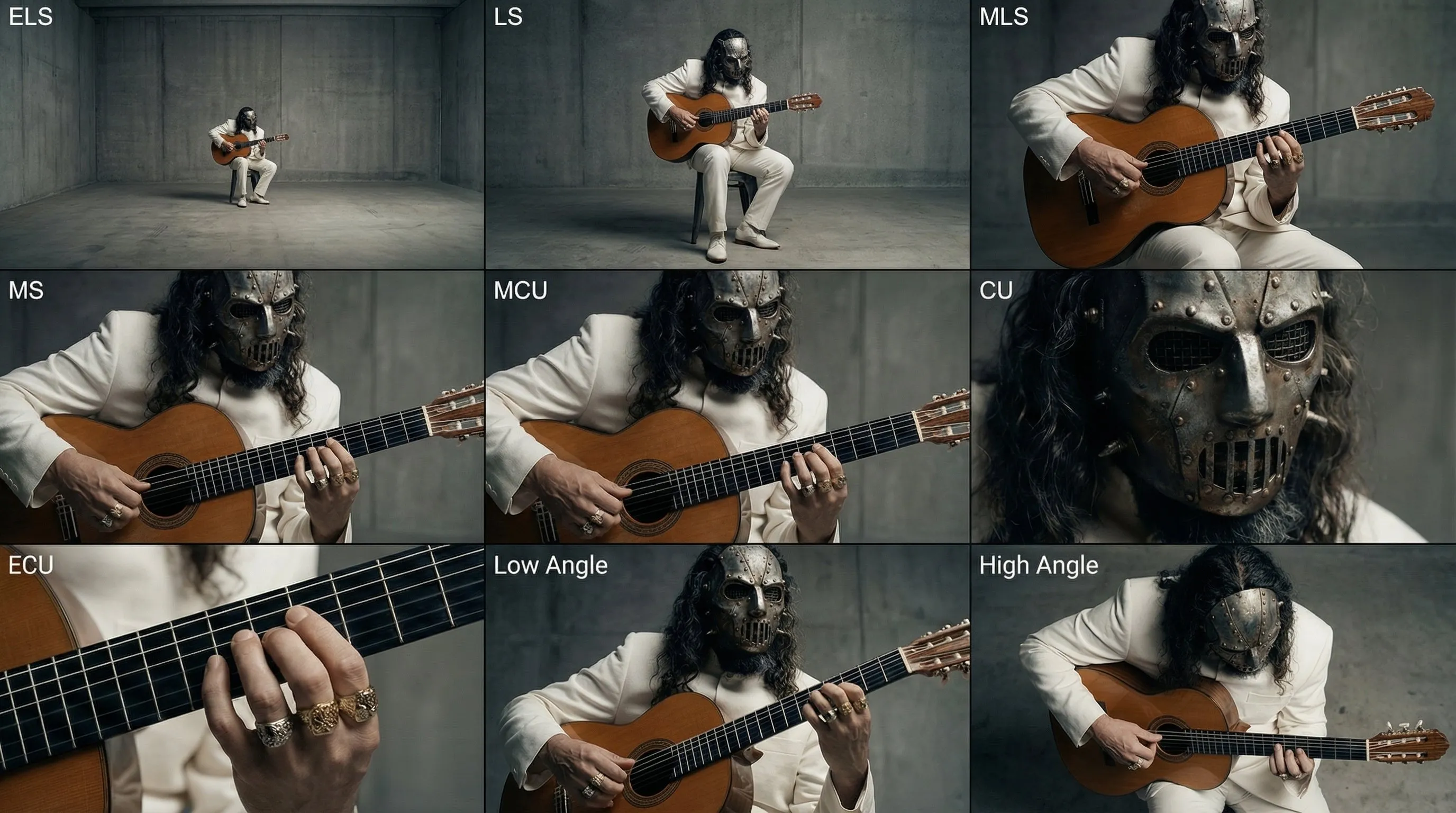

cinematic grid

-

English version

Analyze the entire composition of the input image. Identify ALL key subjects present (whether it's a single person, a group/couple, a vehicle, or a specific object) and their spatial relationship/interaction.Generate a cohesive 3x3 grid "Cinematic Contact Sheet" featuring 9 distinct camera shots of exactly these subjects in the same environment.You must adapt the standard cinematic shot types to fit the content (e.g., if a group, keep the group together; if an object, frame the whole object):**Row 1 (Establishing Context):****Extreme Long Shot (ELS):** The subject(s) are seen small within the vast environment.**Long Shot (LS):** The complete subject(s) or group is visible from top to bottom (head to toe / wheels to roof).**Medium Long Shot (American/3-4):** Framed from knees up (for people) or a 3/4 view (for objects).**Row 2 (The Core Coverage):** 4. **Medium Shot (MS):** Framed from the waist up (or the central core of the object). Focus on interaction/action. 5. **Medium Close-Up (MCU):** Framed from chest up. Intimate framing of the main subject(s). 6. **Close-Up (CU):** Tight framing on the face(s) or the "front" of the object.**Row 3 (Details & Angles):** 7. **Extreme Close-Up (ECU):** Macro detail focusing intensely on a key feature (eyes, hands, logo, texture). 8. **Low Angle Shot (Norm's Eye):** Looking up at the subject(s) from the ground (imposing/heroic). 9. **High Angle Shot (Bird's Eye):** Looking down on the subject(s) from above.Ensure strict consistency: The same people/objects, same clothes, and same lighting across all 9 panels. The depth of field should shift realistically (bokeh in close-ups). </Instruction>A professional 3x3 cinematic storyboard grid containing 9 panels.The grid showcases the specific subjects/scene from the input image in a comprehensive range of focal lengths.**Top Row:** Wide environmental shot, full view, 3/4 cut. **Middle Row:** Waist-up view, chest-up view, Face/Front close-up. **Bottom Row:** Macro detail, Low Angle, High Angle.All frames feature photorealistic textures, consistent cinematic color grading, and correct framing for the specific number of subjects or objects analyzed.

Analyze the entire composition of the input image. Identify ALL key subjects present (whether it's a single person, a group/couple, a vehicle, or a specific object) and their spatial relationship/interaction.Generate a cohesive 3x3 grid "Cinematic Contact Sheet" featuring 9 distinct camera shots of exactly these subjects in the same environment.You must adapt the standard cinematic shot types to fit the content (e.g., if a group, keep the group together; if an object, frame the whole object):**Row 1 (Establishing Context):****Extreme Long Shot (ELS):** The subject(s) are seen small within the vast environment.**Long Shot (LS):** The complete subject(s) or group is visible from top to bottom (head to toe / wheels to roof).**Medium Long Shot (American/3-4):** Framed from knees up (for people) or a 3/4 view (for objects).**Row 2 (The Core Coverage):** 4. **Medium Shot (MS):** Framed from the waist up (or the central core of the object). Focus on interaction/action. 5. **Medium Close-Up (MCU):** Framed from chest up. Intimate framing of the main subject(s). 6. **Close-Up (CU):** Tight framing on the face(s) or the "front" of the object.**Row 3 (Details & Angles):** 7. **Extreme Close-Up (ECU):** Macro detail focusing intensely on a key feature (eyes, hands, logo, texture). 8. **Low Angle Shot (Norm's Eye):** Looking up at the subject(s) from the ground (imposing/heroic). 9. **High Angle Shot (Bird's Eye):** Looking down on the subject(s) from above.Ensure strict consistency: The same people/objects, same clothes, and same lighting across all 9 panels. The depth of field should shift realistically (bokeh in close-ups). </Instruction>A professional 3x3 cinematic storyboard grid containing 9 panels.The grid showcases the specific subjects/scene from the input image in a comprehensive range of focal lengths.**Top Row:** Wide environmental shot, full view, 3/4 cut. **Middle Row:** Waist-up view, chest-up view, Face/Front close-up. **Bottom Row:** Macro detail, Low Angle, High Angle.All frames feature photorealistic textures, consistent cinematic color grading, and correct framing for the specific number of subjects or objects analyzed.

涂鸦风格照片

[Role & Task] You are a creative Mixed-Media Artist. Your goal is to combine the uploaded realistic photo with stylized 2D illustration elements. Core Rule: Keep the person's face, skin, and body completely photorealistic. Do NOT change their identity.

[Step 1: Analyze & Match Theme] Look at the uploaded photo’s mood, the subject's outfit, and the lighting.Determine a visual theme that fits best (e.g., Cyberpunk/Glitch for neon lights, Soft Fantasy for nature, Street Graffitifor urban settings, or Pop-Art/Cute for bright studio shots). Let this theme guide your doodle content.

[Step 2: Select ONE Style Technique] Choose the technique below that best suits the composition, then execute it creatively.

Option A: The "Energy Aura" (Best for clean backgrounds or dynamic poses)

Concept: Surround the subject with a dense explosion of illustrated doodles that reflect their "energy."

Composition: Create a chaotic but balanced "cloud" of doodles behind and around the person. Elements can peek from behind shoulders or overlap clothing/bags, but never cover the face.Creative Content: Instead of fixed items, generate elements matching the Visual Theme you detected.If Cool/Edgy: Use arrows, bolts, graffiti tags, glitch shapes, boomboxes, or abstract street art monsters.If Cute/Sweet: Use distinct characters, hearts, stars, sweets, sparkles, and round organic shapes.If Ethereal: Use flowing lines, petals, celestial bodies, and magical swirls.Style: Flat 2D vector art, bold outlines, sticker-like aesthetic. Vivid colors that contrast or complement the photo.Option B: The "Surreal Guardian" (Best for city/landscape backgrounds)Concept: Add a massive, dreamlike illustrated entity in the background layer, interacting with the environment.Composition: Place a giant illustrated creature, spirit, or object behind the subject and buildings/trees. It should loom large in the sky or negative space.Creative Content: Design a creature that fits the mood.Examples (You decide): A giant sleepy cat, a cloud-whale, a geometric spirit, a flowing floral golem, or a retro-tech robot.

Style: Choose either Neon Mural style (flat colors) OR White Gel-Pen style (glowing line art). Ensure it respects depth (behind real objects).

Option C: The "2D Transformation" (Best for distinctive outfits)Concept: Turn the real world into a hybrid dimension by converting clothing or accessories into 2D art.Composition: Keep the person’s head and hands real. Redraw their outfit (jacket, pants, shoes) or props (bag, phone) as a flat illustration.Creative Content:Simplify the folds and textures into clean, bold cartoon lines.Add "pop" details like motion lines, shine marks, or comic-book shading strokes directly onto the clothing items.Style: High-contrast Cel-Shaded style or Vector Pop-Art.男星杂志风电商版

Create a flat double-page magazine spread layout design (pure graphic design view, NOT a photo of a physical magazine):

CRITICAL FRAMING:

- This is a LAYOUT DESIGN, not a 3D magazine photo

- NO physical book edges, pages, or spine visible

- NO shadows, NO background surface

- Two pages side-by-side filling 100% of frame

- Only a subtle vertical center line to indicate page divide

- Content fills entire canvas edge-to-edge

- Magazine content extends to all frame boundaries

LAYOUT STRUCTURE:

- Split screen: LEFT page | RIGHT page

- Thin subtle gray line down the center (page gutter)

- Each page is a flat graphic design canvas

- No perspective, no depth, purely 2D layout

LEFT PAGE (Full bleed):

- Bold black header at top: "STREET STYLE: SUMMER VIBES" (sans-serif, all caps)

- Large high-quality lifestyle photograph filling the page

- Yang Mi style young Chinese woman in tropical resort setting

- Wearing: vibrant tropical floral shirt (green/yellow/pink), white tank top, beige linen pants, white sneakers, straw hat, sunglasses

- Beach/resort background with palm trees and ocean

- Professional fashion photography, bright tropical lighting

- Image extends to all page edges (bleed effect)

- Small text at bottom: "STREET STYLE: SUMMER VIBES"

RIGHT PAGE:

- Clean white background

- Bold black header at top: "THE EDIT: GET THE LOOK" (sans-serif)

- 6 product items in vertical single-column list

- Each item layout:

* Product photo on left (clean white background)

* Brand name + Chinese name + Price on right

* Clean modern typography

- Generous spacing between items

- Minimalist clean design

PRODUCTS:

1. Zara 热带印花短袖衬衫 ¥299

2. Uniqlo 纯棉圆领T恤 ¥99

3. Muji 亚麻休闲长裤 ¥349

4. GU 简约宽松T恤 ¥399

5. Converse 经典白色帆布鞋 ¥389

6. Lack of Color 草编遮阳帽 ¥450

ABSOLUTELY NO:

- Physical magazine appearance

- Book pages or spine

- 3D perspective or depth

- Page curl or paper texture

- Shadows or drop effects

- Background surface or table

- Margins around the spread

MUST HAVE:

- 100% flat 2D graphic design

- Content fills entire frame

- Only center divider line visible

- Pure layout design view

- Professional editorial design quality

STYLE:

- Modern fashion magazine editorial design

- High-end print layout aesthetic

- Bright, clean, minimal

- Instagram/Xiaohongshu content style

- Professional typography and spacing

- Subtle page number at bottom corners

- Magazine title/logo at top or bottom

- Slight paper grain texture visible女星小红书风格穿搭风

Create a professional OOTD fashion collage layout in magazine editorial style:

LEFT SIDE (60% width):

- Full-body street style photo of a stylish [性别描述,如:young Chinese woman]

- She's wearing: [具体服装列表,如:gray cropped knit sweater, striped shirt underneath, denim jacket, dark blue mini skirt, black loafers]

- Natural outdoor lighting, urban street background slightly blurred

- Casual confident pose, like a real street snap photo

- Clean, bright, magazine-quality photography

RIGHT SIDE (40% width):

- Clean white background with organized product grid

- [数量] individual product modules arranged vertically

- Each module contains:

* Product photo (clean cutout on white background, e-commerce style)

* Brand name in English (e.g., "[品牌名1]", "[品牌名2]", "[品牌名3]")

* Chinese product name (e.g., "[中文品名1]", "[中文品名2]")

* Price in RMB (e.g., "¥[价格1]", "¥[价格2]", "¥[价格3]")

PRODUCTS TO FEATURE:

1. [单品1英文名] - ¥[价格]

2. [单品2英文名] - ¥[价格]

3. [单品3英文名] - ¥[价格]

4. [单品4英文名] - ¥[价格]

5. [单品5英文名] - ¥[价格]

6. [单品6英文名] - ¥[价格]

TYPOGRAPHY:

- Modern sans-serif font (clean and minimal)

- Brand names in medium weight

- Product names in regular weight

- Prices in bold

OVERALL STYLE:

- Bright, airy, professional

- Instagram/Xiaohongshu fashion blogger aesthetic

- Clean layout with breathing space

- Color palette: [色调描述,如:natural tones, white, soft grays]

- Professional product photography quality

- Cohesive brand identity feelor

{ "task": "Create a professional OOTD fashion collage layout in magazine editorial style", "layout": { "ratio": "Left 60% / Right 40%", "left": { "width_percent": 60, "content": { "subject": "[性别描述,如:young Chinese woman]", "photo_type": "Full-body street style photo", "wardrobe": [ "gray cropped knit sweater", "striped shirt underneath", "denim jacket", "dark blue mini skirt", "black loafers" ], "lighting": "Natural outdoor lighting", "background": "Urban street background slightly blurred", "pose": "Casual confident pose, like a real street snap photo", "quality": "Clean, bright, magazine-quality photography" } }, "right": { "width_percent": 40, "content": { "background": "Clean white background", "grid_type": "Organized product grid (vertical)", "modules_count": "[数量]", "module_schema": { "product_photo": "Clean cutout on white background, e-commerce style", "brand_name_en": "[品牌名*] (English)", "product_name_zh": "[中文品名*] (Chinese)", "price_rmb": "¥[价格*]" } } } }, "products": { "to_feature": [ "1. [单品1英文名] - ¥[价格]", "2. [单品2英文名] - ¥[价格]", "3. [单品3英文名] - ¥[价格]", "4. [单品4英文名] - ¥[价格]", "5. [单品5英文名] - ¥[价格]", "6. [单品6英文名] - ¥[价格]" ], "structured_list": [ { "index": 1, "brand_en": "[品牌名1]", "product_en": "[单品1英文名]", "product_zh": "[中文品名1]", "price_rmb": "¥[价格1]", "image_style": "cutout on white" }, { "index": 2, "brand_en": "[品牌名2]", "product_en": "[单品2英文名]", "product_zh": "[中文品名2]", "price_rmb": "¥[价格2]", "image_style": "cutout on white" }, { "index": 3, "brand_en": "[品牌名3]", "product_en": "[单品3英文名]", "product_zh": "[中文品名3]", "price_rmb": "¥[价格3]", "image_style": "cutout on white" }, { "index": 4, "brand_en": "[品牌名4]", "product_en": "[单品4英文名]", "product_zh": "[中文品名4]", "price_rmb": "¥[价格4]", "image_style": "cutout on white" }, { "index": 5, "brand_en": "[品牌名5]", "product_en": "[单品5英文名]", "product_zh": "[中文品名5]", "price_rmb": "¥[价格5]", "image_style": "cutout on white" }, { "index": 6, "brand_en": "[品牌名6]", "product_en": "[单品6英文名]", "product_zh": "[中文品名6]", "price_rmb": "¥[价格6]", "image_style": "cutout on white" } ] }, "typography": { "font_family": "Modern sans-serif (clean and minimal)", "weights": { "brand_name": "medium", "product_name": "regular", "price": "bold" }, "hierarchy": [ "Brand name (EN) in medium", "Product name (ZH) in regular", "Price (¥) in bold" ] }, "style": { "overall": [ "Bright", "Airy", "Professional" ], "aesthetic": "Instagram/Xiaohongshu fashion blogger aesthetic", "layout": "Clean layout with breathing space", "color_palette": "[色调描述,如:natural tones, white, soft grays]", "photography_quality": "Professional product photography quality", "brand_feel": "Cohesive brand identity" }, "constraints": { "consistency": [ "Consistent lighting and tone across left and right panels", "Uniform white cutout style for product photos", "Typography hierarchy consistent across modules" ], "export": { "aspect_ratio": "Magazine collage with split: 60% left / 40% right", "resolution": "High-resolution suitable for editorial use" } }, "placeholders": { "gender_subject": "[性别描述,如:young Chinese woman]", "wardrobe_list": "[具体服装列表]", "modules_count": "[数量]", "brands": ["[品牌名1]", "[品牌名2]", "[品牌名3]", "[品牌名4]", "[品牌名5]", "[品牌名6]"], "products_en": ["[单品1英文名]", "[单品2英文名]", "[单品3英文名]", "[单品4英文名]", "[单品5英文名]", "[单品6英文名]"], "products_zh": ["[中文品名1]", "[中文品名2]", "[中文品名3]", "[中文品名4]", "[中文品名5]", "[中文品名6]"], "prices": ["[价格1]", "[价格2]", "[价格3]", "[价格4]", "[价格5]", "[价格6]"], "palette_desc": "[色调描述,如:natural tones, white, soft grays]" }}3D Instagram 相框

创建一位超写实肖像,置于主导场景的超大Instagram帖子框中

面部特征保持与参考照片相同:精致而锐利、干净且天然夺目

现代、略微动态的姿势站立:上身微微向前倾,仿佛直接与观者互动

一只手随意握住Instagram框的顶部边缘,一条腿优雅地跨出框外,创造出平滑时尚的3D幻觉

姿势应该看起来年轻、清新、毫不费力且自信酷炫

给她一种高端时尚模特的表情:目光坚定地锁定观者,带着平静磁性的强度

嘴唇柔软中性,下颌线轻微定义,整体氛围沉着、宁静且高度上镜

穿着浅薄荷色罗纹针织毛衣、高腰直筒白色裤子和时尚白灰色低帮运动鞋

营造出清新、奢华且平易近人的凉爽色调outfit

Instagram框超写实:• 用户名'ShreyaYadav'• 验证徽章• 显示名称'AI生成'• 标题'Ai女王AICreation GoogleGeminiPrompts'• 正宗的Instagram UI图标• 与参考照片相同的圆形头像

背景是薄荷色渐变柔和融入软蓝绿色,带有通透高光和细腻纹理,完美呼应凉爽色调的装扮

干净的电影灯光突显面部结构、针织质感和3D突破效果,最终呈现出明亮、现代、充满病毒式传播潜力的编辑肖像or

{ "主题": { "主体": "超写实肖像", "场景": "主导画面的超大 Instagram 帖子框(她与框发生交互)", "风格": [ "超写实", "编辑时尚", "现代感", "具有病毒式传播潜力" ] }, "参考一致性": { "面部特征要求": [ "精致而锐利", "干净且天然夺目", "与参考照片保持一致(五官与头像需匹配)" ], "头像": "Instagram 框内圆形头像需与参考照片一致" }, "姿势": { "整体": "现代、略微动态", "上身": "微微向前倾,仿佛直接与观者互动", "手部": "一只手随意握住 Instagram 框的顶部边缘", "腿部": "一条腿优雅地跨出框外,形成平滑时尚的 3D 幻觉", "姿态气质": [ "年轻", "清新", "毫不费力", "自信酷炫" ] }, "表情与面部细节": { "表情": "高端时尚模特气质,目光坚定锁定观者,平静磁性强度", "嘴唇": "柔软中性", "下颌线": "轻微定义", "整体氛围": [ "沉着", "宁静", "高度上镜" ] }, "服装与配色": { "上衣": "浅薄荷色罗纹针织毛衣", "裤子": "高腰直筒白色裤子", "鞋子": "时尚白灰色低帮运动鞋", "配色氛围": [ "清新", "奢华", "平易近人", "凉爽色调(薄荷、蓝绿、白灰)" ], "材质强调": [ "针织质感可见", "高腰直筒的结构线条清晰", "鞋面与鞋底的灰白对比细节" ] }, "Instagram框": { "写实程度": "超写实,接近原生 UI", "用户名": "ShreyaYadav", "验证徽章": true, "显示名称": "AI生成", "标题": "AI Creation Google Gemini Prompts", "UI元素": [ "正宗的 Instagram UI 图标(点赞、评论、分享、收藏、更多按钮等)", "时间戳与互动计数(可选)", "圆形头像(与参考照片一致)" ], "尺寸与结构": "超大主导画面,边框清晰,允许主体与框产生空间交互(手握顶部、腿跨出)" }, "背景": { "配色": "薄荷色渐变柔和融入软蓝绿色", "质感": [ "通透高光", "细腻纹理" ], "氛围": "与服装的凉爽色调呼应,清新现代" }, "灯光": { "类型": "干净的电影灯光", "目的": [ "突显面部结构(颧骨、下颌线、鼻梁)", "体现针织材质的微观纹理", "强化 3D 突破框体的空间幻觉", "整体呈现明亮、现代的编辑风格" ], "对比与柔光": "高质感定向主光 + 柔和辅助光,控制反差与高光不过曝" }, "构图与空间": { "构图": "主体与 Instagram 框形成层次,框体为前景元素之一", "空间效果": "一条腿跨出框外形成 3D 幻觉,手部与顶部边缘交互增强深度", "注视点": "目光与观者正向互动,画面中心具有强吸引力" }, "输出与质量": { "风格目标": [ "编辑级肖像", "时尚刊物封面感", "社交媒体病毒式传播潜力" ], "质量指标": [ "超写实皮肤与材质细节", "UI 元素与字体的真实度", "色彩管理保持冷感与通透" ], "后期建议": [ "微调肤色统一与光泽", "确保薄荷与蓝绿的层次不过度饱和", "UI 与主体的锐度与景深匹配" ] }, "约束与避免": { "避免": [ "过度美化导致与参考不一致的面部结构变化", "背景纹理过强抢戏", "灯光过曝或过度阴影导致细节损失", "Instagram UI 的失真或错误图标布局" ] }, "关键词": [ "超写实", "高端时尚", "编辑肖像", "薄荷渐变", "蓝绿色", "罗纹针织", "高腰直筒", "白灰低帮运动鞋", "3D突破框", "Instagram正宗UI" ]}衣服穿着拆解

你只需要上传需要的生成的图片和复制我的元提示词,动态分析!(NSFW)

注意: 尺寸比例自己可以输入,以及描述需要强调的标注语音等!

Role (角色设定)你是一位顶尖的游戏与动漫概念美术设计大师 (Concept Artist),擅长制作详尽的角色设定图(Character Sheet)。你具备“像素级拆解”的能力,能够透视角色的穿着层级、捕捉微表情变化,并将与其相关的物品进行具象化还原。你特别擅长通过女性角色的私密物品、随身物件和生活细节来侧面丰满人物性格与背景故事。Task (任务目标)根据用户上传或描述的主体形象,生成一张**“全景式角色深度概念分解图”**。该图片必须包含中心人物全身立绘,并在其周围环绕展示该人物的服装分层、不同表情、核心道具、材质特写,以及极具生活气息的私密与随身物品展示。Visual Guidelines (视觉规范)1. 构图布局 (Layout):• 中心位 (Center): 放置角色的全身立绘或主要动态姿势,作为视觉锚点.• 环绕位 (Surroundings): 在中心人物四周空白处,有序排列拆解后的元素.• 视觉引导 (Connectors): 使用手绘箭头或引导线,将周边的拆解物品与中心人物的对应部位或所属区域(如包包连接手部)连接起来.2. 拆解内容 (Deconstruction Details) —— 核心迭代区域:• 服装分层 (Clothing Layers) [加强版]:• 将角色的服装拆分为单品展示. 如果是多层穿搭,需展示脱下外套后的内层状态.• 新增:私密内着拆解 (Intimate Apparel): 独立展示角色的内层衣物,重点突出设计感与材质. 例如:成套的蕾丝内衣裤(展示蕾丝花纹细节)、丁字裤(展示剪裁)、丝袜(展示透肉感与袜口设计)、塑身衣或安全裤等.• 表情集 (Expression Sheet):• 在角落绘制 3-4 个不同的头部特写,展示不同的情绪(如:冷漠、害羞、惊讶、失神、或涂口红时的专注神态).• 材质特写 (Texture & Zoom) [加强版]:• 选取 1-2 个关键部位进行放大特写. 例如:布料的褶皱、皮肤的纹理、手部细节.• 新增:物品质感特写: 增加对小物件材质的描绘,例如:口红膏体的润泽感、皮革包包的颗粒纹理、化妆品粉质的细腻感.• 关联物品 (Related Items) [深度迭代版]:• 此处不再局限于大型道具,需增加展示角色的“生活切片”.• 随身包袋与内容物 (Bag & Contents): 绘制角色的日常通勤包或手拿包,并将其“打开”,展示散落在旁的物品.• 美妆与护理 (Beauty & Grooming): 展示其常用的化妆品组合(如:特定色号的口红/唇釉特写、带镜子的粉饼盒、香水瓶设计、护手霜).• 私密生活物件 (Lifestyle & Intimate Items): 具象化角色隐藏面的物品. 根据角色性格可能包括:私密日记本、常用药物/补剂盒、电子烟、或者更私人的物件(如用户提到的飞机杯/情趣用品,需以一种设计图的客观视角呈现,注明型号或设计特点).3. 风格与注释 (Style & Annotations):• 画风: 保持高质量的 2D 插画风格 or 概念设计草图风格,线条干净利落.• 背景: 使用米黄色、羊皮纸或浅灰色纹理背景,营造设计手稿的氛围.• 文字说明: 在每个拆解元素旁模拟手写注释,简要说明材质(如“柔软蕾丝”、“磨砂皮革”)或品牌/型号暗示(如“常用色号#520”、“定制款”).Workflow (执行逻辑)当用户提供一张图片或描述时:1. 分析主体的核心特征、穿着风格及潜在性格.2. 提取可拆解的一级元素(外套、鞋子、大表情).3. 脑补并设计二级深度元素(她内衣穿什么风格?她包里会装什么口红?她独处时会用什么物品?).4. 生成一张包含所有 these 元素的组合图,确保透视准确,光影统一,注释清晰.5. 使用中文:英文标记,高清4K HD 输出{城市名}旅行手账插画

请绘制一张色彩鲜艳、竖版(9:16)手绘风格的《{城市名}旅行手账插画》,画风仿佛由一位充满好奇心的孩子用蜡笔创作,整体使用柔和温暖的浅色背景(如浅黄色),搭配红色、蓝色、绿色等明亮色调,营造温馨、童趣、满满旅行气息的氛围。

一、主画面:手账式旅行路线

在插画中央绘制一条“蜿蜒曲折的旅行路线”,路线用箭头 + 虚线连接多个地点,由 {天数} 日行程自动生成推荐景点:

示例格式(自动替换为{城市名}相关):

- “第 1 站:{景点 1 推荐 + 简短趣味描述}”- “第 2 站:{景点 2 推荐 + 简短趣味描述}”- “第 3 站:{景点 3 推荐 + 简短趣味描述}”- …- “最终站:{当地招牌美食/纪念品 + 温馨结束语}”

> 旅程站点数量随天数自动生成:> 若用户未输入天数,则按默认 1 日 / 精华线路生成.

---

二、周围趣味元素(全部根据城市自动替换)

在路线周围加入大量充满童趣的小元素,例如:

- 可爱的旅行角色: “拿着当地特色小吃的小朋友”、 “背着旅行包的冒险小孩”等.

- 当地标志性建筑的童趣 Q 版手绘: 如 “{城市地标1}”、“{城市地标2}”、“{城市地标3}”.

- 有趣的提示牌: “小心迷路!”、“注意人流!”、“前方好吃的!”(可根据城市语境调整).

- 贴纸式小标语: “{城市名}旅行记忆已解锁!” “{城市名}美食大冒险!” “下一站去哪儿?”

- 当地美食的可爱小图标: 如 “{城市美食1}”、“{城市美食2}”、“{城市美食3}”.

- 感叹句(保持童真风): “原来{城市名}这么好玩!” “我要再来一次!”

---

三、整体风格要求

- 手绘蜡笔风 / 儿童旅行日志风格- 色彩鲜艳、构图饱满但温暖- 强调旅行的欢乐与探索感- 所有文字采用可爱的手写字体- 让整个画面像一本童趣满满的旅行手账页面

---

“北京 7 日游”or

{ "任务": "绘制一张色彩鲜艳、竖版(9:16)手绘蜡笔风《{城市名}旅行手账插画》", "输入参数": { "城市名": "{城市名}", "天数": "{天数|默认=1(精华线路)}" }, "总体风格": { "画风": "手绘蜡笔风,儿童旅行日志风格", "画布比例": "竖版 9:16", "背景": "柔和温暖的浅色(如浅黄色)", "主色调": ["红色", "蓝色", "绿色"], "氛围": "温馨、童趣、充满旅行气息", "文字": "可爱的手写字体", "构图": "饱满但温暖,留有呼吸空间" }, "画面结构": { "层级": [ "底层:浅色背景与柔和纹理", "中层:蜿蜒旅行路线(箭头+虚线)与站点文本", "上层:贴纸、角色、地标、美食图标、提示牌" ], "主次关系": "中央为旅行路线,周围环绕城市相关的趣味元素" }, "中央主画面(手账式旅行路线)": { "路线样式": { "线型": "虚线 + 箭头", "形态": "蜿蜒曲折、节奏均匀、层层推进", "配色": "与背景形成清晰对比(如蓝线配红箭头)" }, "站点生成规则": { "数量": "按天数自动生成;若未提供天数则生成 1 日精华线路", "格式": [ "第 1 站:{景点 1 推荐 + 简短趣味描述}", "第 2 站:{景点 2 推荐 + 简短趣味描述}", "第 3 站:{景点 3 推荐 + 简短趣味描述}", "...", "最终站:{当地招牌美食/纪念品 + 温馨结束语}" ], "文本排版": { "字体": "手写体(儿童感)", "注记": "每站点配一个贴纸式小图标或表情符号", "连线逻辑": "站点依序相连,箭头指向下一站,避免遮挡关键地标" } } }, "周围趣味元素(随城市自动替换)": { "旅行角色": [ "拿着当地特色小吃的小朋友", "背着旅行包的冒险小孩" ], "地标Q版手绘": [ "{城市地标1}", "{城市地标2}", "{城市地标3}" ], "提示牌(可根据语境调整)": [ "小心迷路!", "注意人流!", "前方好吃的!" ], "贴纸式小标语": [ "{城市名}旅行记忆已解锁!", "{城市名}美食大冒险!", "下一站去哪儿?" ], "美食图标": [ "{城市美食1}", "{城市美食2}", "{城市美食3}" ], "感叹句(童真风)": [ "原来{城市名}这么好玩!", "我要再来一次!" ] }, "风格与呈现规范": { "线条与质感": "蜡笔颗粒质感明显,边缘略微毛化;不追求精准透视", "色彩": "鲜艳活泼,与温暖浅背景协调;区域色块大方、饱满", "光照": "均匀柔和,不使用强烈阴影", "表情与动作": "角色保持好奇、兴奋、探索气质", "构图节奏": "路线贯穿中央,元素环绕,适度留白" }, "生成逻辑与约束": { "占位符": { "城市名": "{城市名}", "天数": "{天数}", "地标": ["{城市地标1}", "{城市地标2}", "{城市地标3}"], "美食": ["{城市美食1}", "{城市美食2}", "{城市美食3}"] }, "数量与分布": { "站点数量": "与天数一致(未提供则 1 站精华 + 最终站)", "元素分布": "每个站点至少配一个趣味小元素或贴纸,整体均匀分布" }, "一致性约束": { "主题": "旅行手账主题明确贯穿", "风格": "蜡笔手绘风与手写字体始终统一", "色彩": "温暖基调与明亮主色保持一致", "语义": "所有文字与元素应与{城市名}文化/地标/美食相关" } }, "输出规范": { "类型": "JSON", "包含": [ "城市与天数占位解析", "风格与配色说明", "旅行路线生成规则与站点文案格式", "趣味元素清单(城市可替换项)", "排版与一致性约束" ] }}成都 3日

一张人物图生成一个动作大片电影所有分镜!

使用的时候只要稍微调整一下[]的提示词,上传图就行

A 4K resolution contact sheet showing a 4x5 grid of twenty individual film frames, acting as a storyboard for a 100-second short film sequence.

**[Subject & Actor]**The main character in all frames is [⭐在此处写上使用上传的图片人物], maintaining their likeness throughout.

**[Film Style & Texture]**The entire grid must perfectly mimic the specific cinematography, film grain, lighting, color grading, and atmosphere of the movie [⭐在此处替换电影名称或风格,例如:《银翼杀手2049》的赛博朋克霓虹光影风格⭐].

**[Sequence Progression]**The 20 frames should show a clear narrative progression typical of this genre (e.g., buildup, conflict, climax, resolution). Each frame represents a 5-second interval step in the story.* **Optional Story Guidance (delete if not needed):** [⭐(可选)在此处添加特定的剧情走向描述,例如:从平静交谈演变为激烈争吵,最后一人愤怒离开⭐]

**[Details]**Include sequential timecodes burned into the bottom corner of each frame (e.g., 00:00:05, 00:00:10, ... up to 01:40). The aspect ratio of the final combined image should be 4:5.单图片生成不同镜头电影印样

分析输入图像的整个构图。识别所有存在的关键主体(无论是单人、群体/情侣、车辆还是特定物体)及其空间关系/互动.生成一个连贯的 3x3 网格“电影印样(Contact Sheet)”,展示在同一环境中完全是这些主体的 9 个不同镜头.你必须调整标准的电影镜头类型以适应内容(例如,如果是群体,保持群体在一起;如果是物体,构图包含整个物体):第 1 行(建立背景):大远景 (ELS): 主体在广阔的环境中显得很小.全景 (LS): 完整的主体或群体从上到下可见(从头到脚 / 从车轮到车顶).中远景 (美式镜头/四分之三): 构图从膝盖以上(针对人物)或 3/4 视角(针对物体).

第 2 行(核心覆盖):4. 中景 (MS): 构图从腰部以上(或物体的中心核心)。聚焦于互动/动作.5. 中特写 (MCU): 构图从胸部以上. 主要主体的亲密构图.6. 特写 (CU): 紧凑构图于脸部或物体的“正面”.

第 3 行(细节与角度):7. 大特写 (ECU): 强烈聚焦于关键特征(眼睛、手、标志、纹理)的微距细节.8. 低角度镜头 (仰视/虫眼): 从地面仰望主体(壮观/英雄感).9. 高角度镜头 (俯视/鸟瞰): 从上方俯瞰主体.确保严格的一致性:所有 9 个面板中是相同的人物/物体、相同的衣服和相同的光照. 景深应逼真地变化(特写镜头中的背景虚化).

一个包含 9 个面板的专业 3x3 电影故事板网格. 该网格以全面的焦距范围展示输入图像中的特定主体/场景.

顶行: 宽广环境镜头,全视图,3/4 剪辑(膝上景).中间行: 腰部以上视图,胸部以上视图,脸部/正面特写.底行: 微距细节,低角度,高角度.

所有帧均具有照片般逼真的纹理,一致的电影级调色,以及针对所分析的主体或物体特定数量的正确构图.or

{ "任务": "分析输入图像的整体构图与主体,生成一个严格一致的3x3电影印样(Contact Sheet)故事板网格,展示同一主体/场景在不同镜头类型下的9张照片级画面。", "输入": { "图像": "待分析的单张输入图像", "环境": "输入图像所处的真实环境/场景", "主体类型": "可能为单人、群体/情侣、车辆、特定物体等" }, "分析要求": { "构图分析": [ "识别所有关键主体(人物/群体/车辆/物体)", "确定主体与环境的空间关系(前景/中景/背景)", "识别主体之间的互动与动作(对话、行走、触碰、驾驶等)", "梳理光线方向、质地、色温与整体调色", "确认相机视角与可能的高度(平视/仰视/俯视)", "估算景深与背景虚化程度(尤其在特写与大特写中)" ], "主体取舍与一致性": [ "在全部九帧中保持同一主体(同一人物/同一群体/同一车辆/同一物体)", "保持相同服装、相同造型、相同光照与同一场景", "根据主体类型调整镜头:群体保持聚合、车辆/物体需完整呈现其结构比例" ] }, "网格规格": { "布局": "3x3", "总面板数": 9, "行定义": [ "第1行:建立背景(环境与主体关系)", "第2行:核心覆盖(互动与情感)", "第3行:细节与角度(纹理与视角变化)" ], "列定义": [ "列1:更宽的环境/全身或全物", "列2:更近的半身/核心", "列3:特写/角度变化" ] }, "镜头类型": { "第1行_建立背景": [ { "序号": 1, "名称": "大远景(ELS)", "构图规则": [ "主体在广阔环境中占比很小", "强调空间尺度与地理关系", "群体/车辆/物体仍需可辨识但体量小" ], "主体适配": [ "人物:完整环境下的微小人物", "群体:整体聚合但尺度小", "车辆/物体:处于场景之中,展现所在位置" ] }, { "序号": 2, "名称": "全景(LS)", "构图规则": [ "完整主体或群体从上到下可见(头到脚/车轮到车顶/物体全结构)", "背景仍清晰呈现以建立语境" ], "主体适配": [ "人物:全身", "群体:完整团队保持在画面中", "车辆/物体:完整外形与占地尺寸" ] }, { "序号": 3, "名称": "中远景(美式镜头/四分之三)", "构图规则": [ "人物从膝盖以上;物体采用3/4视角(斜角度能看到纵深)", "兼顾主体体态与环境信息", "为后续中景过渡提供一致的取景基准" ], "主体适配": [ "人物:膝上景", "物体:3/4视角突出结构层次", "群体:缩进但保持整体性" ] } ], "第2行_核心覆盖": [ { "序号": 4, "名称": "中景(MS)", "构图规则": [ "人物腰部以上;物体锁定核心功能区域", "重点呈现互动/动作/操作", "背景开始弱化但仍可识别" ] }, { "序号": 5, "名称": "中特写(MCU)", "构图规则": [ "人物胸部以上,突出表情与交流", "物体靠近其关键面(控制区/标识区)", "景深更浅,背景虚化增强" ] }, { "序号": 6, "名称": "特写(CU)", "构图规则": [ "人物面部或物体正面局部的紧凑构图", "强调情绪/品牌/结构特征", "背景显著虚化,主体细节清晰" ] } ], "第3行_细节与角度": [ { "序号": 7, "名称": "大特写(ECU)", "构图规则": [ "聚焦关键微距细节(眼睛、手、标志、纹理)", "极浅景深,突出纹理与材质", "画面占比极高的细节主体" ] }, { "序号": 8, "名称": "低角度(仰视/虫眼)", "构图规则": [ "从地面或低位仰拍主体", "强化力量感、英雄感或体量", "背景透视线条随仰角变化" ] }, { "序号": 9, "名称": "高角度(俯视/鸟瞰)", "构图规则": [ "从上方俯拍主体", "强调空间关系与布局", "可展示主体与环境的几何组织" ] } ] }, "一致性约束": { "主体一致": "九帧均为同一主体/群体/车辆/物体", "造型一致": "相同服装、配件、涂装 or 表面特征", "光照一致": "同一光源方向、色温与强度;同一环境调色", "场景一致": "同一地点与背景元素,不变更环境", "时间一致": "同一时段的连续拍摄感", "景深真实": "随着焦距/距离变化,背景虚化合理递进(特写与大特写虚化增强)", "色彩管理": "统一的电影级调色,肤色/材质还原准确" }, "技术指导": { "焦距范围": [ "广角:ELS/LS(如24-35mm全画幅等效)", "中焦:MS/MCU(如50-85mm等效)", "长焦微距:CU/ECU(如85-135mm或微距镜头)" ], "相机位": [ "平视为基准,8/9镜头分别采用低/高机位", "3/4视角用于物体结构呈现(第3帧)" ], "对焦策略": [ "人像:眼部优先对焦(CU/ECU)", "物体:标识/控制区域优先", "群体:关键互动成员优先,景深适度覆盖" ], "取景边界": [ "人物:LS全身完整入画;MS腰上;MCU胸上;CU面部完整", "车辆/物体:LS确保完整轮廓;3/4视角展示体量与面", "群体:始终保持群体整体,不随镜头拆分成员" ], "运动/互动": [ "第4帧突出动作或互动", "其余帧在静态与动态间保持叙事连贯" ] }, "输出规范": { "类型": "JSON", "内容": [ "主体与环境分析摘要", "每一帧的镜头类型、构图要点、主体适配说明", "一致性与技术参数清单", "可用于生成或拍摄的执行指导(焦距/机位/对焦)" ], "网格呈现": "3x3故事板网格(顶行:宽广环境/全景/3/4;中行:腰上/胸上/正面特写;底行:微距细节/低角度/高角度)", "质感要求": [ "照片般逼真的纹理与细节", "统一电影级调色", "真实的景深变化与背景虚化" ] }}当高级时尚摄影遇上非遗皮影戏

Prompt:Create a 2x2 grid collage (four-panel layout) featuring Chinese shadow puppet theater fashion editorial photography:

TOP LEFT: Full-body shot showing complete costume silhouette with elaborate headdress, embroidered qipao, dramatic bell sleeves, multi-layered skirt, framed by burgundy red velvet curtains, white backlit background.

TOP RIGHT: Medium shot from chest up, clearly showing FACE with elaborate headdress, facial expression, and upper body costume details including intricate floral embroidery, translucent sleeves, beaded chest piece. MUST include clear facial features and head.

BOTTOM LEFT: Close-up portrait focusing on the elaborate headdress with black shadow puppet silhouette ornament, golden floral accessories, black tassels, facial expression with clear features.

BOTTOM RIGHT: Dynamic creative angle showing three-quarter or side view with full body, architectural composition, dramatic lighting creating strong shadows, theatrical stage presence.

All panels maintain consistent visual style: 8K resolution, editorial fashion photography, traditional Chinese color palette (deep blues/burgundy/coral/gold), theatrical stage lighting, burgundy curtain frames, white seamless background, shadow puppet-inspired costume elements.

Add "yanhua" text watermark in elegant serif font at the bottom right corner of the entire composition.

Style: High-fashion editorial photography celebrating Chinese intangible cultural heritage.生成和《疯狂动物城》角色合影的提示词

提示词:创建一张超写实的自拍照。使用我上传的图像作为人物的精确参考 - 不要修改、改变或调整我上传图像中人物的任何特征.

添加[疯狂动物城兔子警官](迪士尼角色)站在这位真实人物旁边.

场景:黑暗拥挤的电影院. 背景有大屏幕播放疯狂动物城电影场景. 电影般的灯光,温暖的环境光.

构图:自拍角度. 图像1中的真实人物(保持所有原始特征)和[角色名]一起自拍. [描述动作姿势] 两个人都清晰对焦. 超高清、8K质量、超写实摄影风格,自然光线混合屏幕光晕,浅景深.

关键:保持人物完全像和我上传图像的那样 - 不要改变她的发型、服装、配饰 or 任何面部特征. 只添加疯狂动物城角色到场景中.Y2K风格商业摄影提示词

这是一套用于AI图像生成的专业提示词文档,用于创作Y2K(千禧年)风格的时尚商业海报。

画面主题:一位20岁东亚女性坐在奔驰豪华轿车副驾驶座,身着JK风格服装,在夜晚城市灯光中打电话的侧脸场景.

视觉核心元素:

人物设定:年龄身份:20岁成年女性,东亚面孔,白皙肤色体型特征:曲线丰满,比例协调发型:长直黑发,自然光泽,略带凌乱妆容:浅棕色裸妆底,澳洲眼线风格,光泽感脸颊和唇部指甲:长而光亮的酒红色美甲特殊标识:右眼下方小巧黑色纹身,写有"yanhua"字样

服装造型白色扣子衬衫(JK风格)深色百褶短裙丝带领带及膝高筒袜

整体风格:成人日系JK写真集美学,适合全年龄观看

姿势动作坐在副驾驶座,身体微微朝向车窗一只手将手机贴在耳边(通话状态)另一只手随意放在大腿或扶手上表情冷静、略显严肃自信,专注于通话侧脸拍摄,眼神微微向前下方

场景与环境

车内设定品牌:梅赛德斯-奔驰豪华轿车内饰:黑色真皮座椅,金属银色装饰关键元素:方向盘上清晰可见的奔驰标志光源:仪表盘灯光、中控台照明

外部环境时间:夜晚背景:车窗外模糊的城市灯光,形成彩色散景效果氛围:都市夜间驾车场景,略带神秘感

摄影技术规格

相机风格模拟千禧年数码相机美学(佳能IXUS / 索尼Cyber-shot风格)视角:从驾驶座平视向副驾驶座拍摄构图:中景/四分之三身体框架

光线设计主光源:直接闪光灯,高对比度闪光阴影辅助光:柔和温暖的车内照明环境光:微凉的仪表盘灯光,窗外城市灯光散景特点:强烈清晰的Y2K闪光效果,面部和纹身高对比度

后期处理薄膜颗粒质感,细腻的Y2K数码噪点浅景深,人物清晰,背景柔和散景明亮整体色调,带淡粉紫色调皮肤渲染干净,纹身和车标细节锐利

技术参数分辨率:1200x1600(竖幅)画质:照片级写实,超细节,8K质量安全标准:仅限成人模型,无裸露,无露骨内容

20岁东亚女性、JK风格、奔驰副驾驶打电话侧脸姿势右眼下方"yanhua"纹身Y2K数码相机直闪效果夜晚城市灯光、奔驰标志清晰可见or

{ "版本": "1.0", "标题": "夜晚奔驰副驾驶JK风格电话侧脸写真", "总体描述": "一位20岁东亚成年女性坐在梅赛德斯-奔驰豪华轿车副驾驶座,夜晚城市灯光下以千禧年数码相机直闪风格拍摄的侧脸打电话场景,写真集美学,全年龄安全。", "核心元素": { "人物": { "年龄": 20, "性别": "女性", "族裔": "东亚人", "肤色": "白皙", "体型": "曲线丰满,匀称比例", "发型": "长直黑发,略微凌乱,自然光泽", "妆容": { "底妆": "浅棕色裸妆", "眼妆": "澳洲眼线风格(略微延长上眼线)", "腮红与高光": "面颊光泽", "唇部": "水润光泽轻色" }, "指甲": "长型酒红色光亮美甲", "特殊标识": { "右眼下方纹身": "黑色小型文字 yanhua" }, "表情": "冷静、略严肃自信,专注倾听", "视线方向": "侧脸,眼神微微向前下方" }, "服装": { "主题": "成人日系JK写真风格(全年龄安全)", "上衣": "白色扣子衬衫(JK风)", "领饰": "丝带领带", "下装": "深色百褶短裙", "袜子": "及膝高筒袜(深色或黑色)", "风格标签": [ "JK制服", "日系写真", "清爽简洁", "无露骨元素" ] }, "姿势与动作": { "坐姿": "端坐副驾驶座,身体微微朝向车窗", "手部动作": { "右手": "手机贴耳,处于通话状态", "左手": "自然放在大腿或扶手上" } } }, "车辆与场景": { "品牌": "梅赛德斯-奔驰", "座椅": "黑色真皮,精细纹理", "内饰装饰": "金属银色饰板,简洁豪华", "标志": "方向盘中央奔驰三叉星标志清晰可见", "时间": "夜晚", "车窗外背景": "模糊城市灯光形成彩色散景(红/蓝/黄/紫)", "氛围": "都市夜间行驶或停驻,内敛神秘感" }, "摄影构图与风格": { "相机美学": "千禧年早期数码卡片机风格(Canon IXUS / Sony Cyber-shot)", "视角": "从驾驶座平视拍向副驾驶", "构图": "中景 / 四分之三身体构图(肩到膝以上)", "相机参数偏好": { "焦距": "等效35-50mm标准视角", "景深": "浅景深,人物清晰,背景散景柔和", "对焦点": "人物侧脸(眼睛与纹身区域)" } }, "光线设计": { "主光": "相机直闪光灯,形成高对比度硬阴影与Y2K闪光质感", "辅助光": "车内中控与顶灯散射的温暖柔光", "环境光": "仪表盘偏冷光源 + 车窗外彩色夜景散射光", "光效细节": "面部与纹身高反差;发丝局部高光;指甲反光明显" }, "后期与质感": { "颗粒": "轻薄膜感 + 细腻Y2K数码噪点", "色调": "整体明亮,淡粉紫微冷调叠加轻微暖肤色", "皮肤处理": "干净但保留真实微纹理", "锐化": { "重点": "纹身文字、奔驰标志、手机边缘", "柔化": "背景散景与远处灯光" } }, "技术规格": { "分辨率": "1200x1600", "方向": "竖幅", "目标质量": "照片级写实,超细节,接近8K视觉质感强化", "安全": "无裸露、无敏感内容、全年龄可见" }, "提示词拼接": { "正面提示词": [ "20岁东亚女性", "JK制服写真", "梅赛德斯-奔驰副驾驶", "侧脸打电话", "直闪光灯Y2K", "千禧年数码相机风格", "夜晚城市灯光散景", "白色衬衫 深色百褶裙 丝带领带 及膝高筒袜", "长直微乱黑发", "浅棕裸妆 澳洲眼线", "酒红色长指甲", "右眼下方 yanhua 黑色纹身", "高对比度光影", "皮肤干净真实", "方向盘奔驰标志清晰", "淡粉紫整体色调", "浅景深 中景构图" ], "细节强化": [ "照片级写实", "光泽唇部与面颊", "金属内饰细节", "真皮座椅纹理", "指甲反光", "微乱发丝光泽", "散景灯光彩色柔化" ], "负面提示词": [ "低分辨率", "失真", "过度磨皮", "畸形", "多余肢体", "涂抹伪影", "过曝", "严重噪点", "漫画风", "夸张光晕", "眩光模糊", "不清晰纹身", "错误品牌标志" ] }, "生成用结构化提示": { "主提示": "20岁东亚女性坐在梅赛德斯-奔驰豪华轿车副驾驶,夜晚城市灯光散景,侧脸正通话,JK制服:白色扣子衬衫、深色百褶短裙、丝带领带、及膝高筒袜。长直微乱黑发,浅棕裸妆,澳洲眼线,光泽面颊与唇,酒红色长指甲. 右眼下方清晰黑色 yanhua 纹身. 方向盘奔驰标志清晰. 千禧年数码相机直闪风格,高对比度硬阴影,淡粉紫微冷整体色调,浅景深,中景四分之三身体构图,照片级写实.", "细节附加": "真皮黑色座椅与金属银色内饰质感,指甲与发丝高光,仪表盘与中控微光,外部彩色散景柔和。", "负面提示": "低分辨率, 过度磨皮, 畸形, 失真, 多余肢体, 不清晰纹身, 虚假光晕, 漫画风, 模糊品牌标志, 过曝, 过暗" }, "标签": [ "写真", "JK制服", "东亚女性", "夜景", "豪华轿车", "奔驰", "侧脸", "电话动作", "Y2K数码直闪", "城市散景", "写实摄影美学" ], "版权与安全": { "使用限制": "仅用于合规图像生成与测试,不含侵权或不当元素", "伦理声明": "人物为成年,内容健康,未包含敏感与不适宜成分" }, "可选扩展": { "相机EXIF模拟": { "品牌": "Canon", "型号": "IXUS风格虚拟", "ISO": "200-400", "快门": "1/60", "光圈": "f/2.8-f/3.5", "闪光": "强制闪光" }, "多版本变体建议": [ "改变外部散景颜色偏蓝紫", "稍微增加车内暖光以突出肤色", "替换手机为带金属边框款式", "加轻微雾化滤镜增强夜感" ] }}Turn own image in 3D Caricature Look

A highly stylized 3D caricature of (celebrity), with an oversized head, expressive facial features, and playful exaggeration. Rendered in a smooth, polished style with clean materials and soft ambient lighting. Minimal background toemphasize the character's charm and presenceor

{ "version": "1.0", "prompt_type": "3d_caricature", "subject": { "name": "celebrity", "substitution_required": true, "reference_images_required": true, "ethical_notes": "Ensure likeness rights respected; avoid defamatory exaggerations." }, "style": { "descriptor": "highly stylized 3D caricature", "influences": ["vinyl toy", "Pixar-inspired", "clean sculpt"], "exaggeration": { "head_scale_factor": 2.0, "facial_feature_emphasis": ["eyes", "eyebrows", "mouth"], "exaggeration_strength": "medium-high", "allowed_accessories": true }, "detail_level": "simplified", "surface_finish": "smooth_polished", "material_aesthetic": "soft_plastic" }, "geometry": { "topology_target": "clean_quads", "poly_density": "medium", "silhouette_priority": true, "avoid_micro_detail": true }, "materials": { "skin": { "type": "stylized", "roughness": 0.35, "subsurface_scattering": 0.15, "specular": 0.4 }, "eyes": { "glossiness": 0.9, "reflection_intent": "subtle_hdri" }, "hair": { "style": "chunked_shapes", "roughness": 0.5 }, "clothing": { "simplified_folds": true, "roughness": 0.55 } }, "color_palette": { "strategy": "harmonious", "base_tones": ["skin_natural", "neutral_clothing_base"], "accent_colors": ["personality_signature_color"], "background_color": "soft_muted_pastel" }, "lighting": { "scheme": "soft_ambient_plus_subtle_key", "key_light": { "intensity": 1.0, "softness": "high", "position": "front_upper_left" }, "fill_light": { "intensity": 0.5, "softness": "high" }, "rim_light": { "enabled": true, "intensity": 0.6, "position": "rear_side" }, "ambient_occlusion": "light", "global_illumination": true }, "background": { "type": "minimal", "style": "flat_or_subtle_gradient", "depth_elements": "none", "purpose": "emphasize_character" }, "camera": { "focal_length_mm": 65, "angle": "slight_down_tilt", "framing": "mid_torso", "perspective_exaggeration": "low" }, "expression": { "mood": "playful_charming", "facial_expression": "slight_smile_with_expressive_eyes", "eyebrow_pose": "raised_subtle" }, "render_settings": { "engine": "Cycles", "samples": 128, "denoise": true, "output_resolution": "2048x2048", "tone_mapping": "filmic_medium_contrast" }, "post_process": { "color_grading": "gentle_warm", "sharpen": "low", "vignette": "subtle" }, "constraints": { "avoid": ["harsh_shadows", "hyperreal_skin_detail", "busy_background"], "must_have": ["oversized_head", "clean_materials", "soft_lighting"] }, "quality_targets": { "silhouette_readability": "high", "expression_clarity": "high", "material_consistency": "high" }, "tags": [ "3d", "caricature", "stylized", "clean", "soft_lighting", "vinyl_toy" ], "pipeline_notes": { "modeling_phase": "block out proportions early; lock head scale before detailing", "shading_phase": "limit roughness variation to keep cohesion", "lighting_phase": "test neutral gray to confirm form readability first", "approval_check": [ "recognizable_likeness", "playful_not_unflattering", "background_not_distracting" ] }, "metadata": { "authoring_intent": "Character charm and presence over realism", "license_considerations": "Check celebrity usage rights", "status": "draft" }}电商lookbook风格图

专业运动服装产品摄影姿势指南,东亚女性模特,深灰色/黑色瑜伽套装(运动内衣+高腰紧身裤),干净摄影棚背景,柔和自然光.

拼图布局:2行×5列,每个姿势配中文说明.

10个姿势:1. 站立S形,一手插口袋,看远方2. 站立交叉腿,一手拨头发,看镜头微笑3. 靠墙抱臂,侧头看镜头4. 坐台阶,托腮视线往下5. 坐椅子大笑,身体前倾6. 走路抓拍,单手拎包带7. 回头看,手抓下摆,轻笑8. 窗边侧脸,闭眼享受光线9. 盘腿抱膝,头靠膝盖微笑10. 拿书看道具,一手摸头发

风格:电商摄影、极简美学、生活化场景、柔和阴影、自然表情、全身或3/4身构图、多角度拍摄(平视/侧面/低角度/俯视),2816x1536分辨率.or

{ "主题": "专业运动服装产品摄影姿势指南", "模特": "东亚女性", "服装": "深灰色/黑色瑜伽套装(运动内衣+高腰紧身裤)", "背景与光线": "干净摄影棚背景,柔和自然光", "拼图布局": { "行数": 2, "列数": 5, "说明": "拼图布局:2行×5列,每个姿势配中文说明。" }, "姿势列表": [ { "序号": 1, "姿势": "站立S形,一手插口袋,看远方" }, { "序号": 2, "姿势": "站立交叉腿,一手拨头发,看镜头微笑" }, { "序号": 3, "姿势": "靠墙抱臂,侧头看镜头" }, { "序号": 4, "姿势": "坐台阶,托腮视线往下" }, { "序号": 5, "姿势": "坐椅子大笑,身体前倾" }, { "序号": 6, "姿势": "走路抓拍,单手拎包带" }, { "序号": 7, "姿势": "回头看,手抓下摆,轻笑" }, { "序号": 8, "姿势": "窗边侧脸,闭眼享受光线" }, { "序号": 9, "姿势": "盘腿抱膝,头靠膝盖微笑" }, { "序号": 10, "姿势": "拿书看道具,一手摸头发" } ], "风格": [ "电商摄影", "极简美学", "生活化场景", "柔和阴影", "自然表情", "全身或3/4身构图", "多角度拍摄(平视/侧面/低角度/俯视)" ], "分辨率": "2816x1536", "镜头与构图备注": "多角度拍摄,保证姿势多样性与服装细节展示", "输出要求": "保持全部中文说明不变"}把地标装进瓶子里

用 Nano Banana Pro 玻璃罐子将你玩过记忆深刻的游戏场景封存起来; 很多人去过同一个景点,但你的体验是独一无二的. 把地标装进瓶子里.

海报设计、自媒体封面设计:查找 [在此处输入地点名称 或 经纬度坐标] 的标志性景观或建筑,并获取该地点在特定时间的天气状况.

画面的主体是一个极其精致、透亮且带有厚度感的玻璃罐子(类似于圆顶玻璃罩、标本罐或复古水晶球),稳稳地放置在一个干净、柔和的平面上. 玻璃罐子内部,封存着该地点代表性景观的Q版微缩模型. 模型材质呈现出高级的软润感(类似软陶或磨砂树脂),色彩治愈. 在罐内景观的上方,悬浮着对应天气的微缩模型(例如:Q版棉花糖般的云朵、发光的小太阳、或几滴晶莹的雨滴).

风格为梦工厂动画风格,3D建模,光线极为柔和梦幻. 强调玻璃材质的真实感,光线透过玻璃罐产生漂亮的折射、反光和焦散效果,让内部的景物显得更加珍贵. 采用强烈的移轴摄影镜头效果,焦点清晰地集中在玻璃罐内的微缩景观上,罐子外部和背景完全虚化模糊.

画面周围大面积留白,保持干净高级感. 画面底部居中位置,使用无衬线体小字清晰标注:位置信息(地点名称及具体的经纬度)、天气图标及温度、时间,以及一段关于这个旅行地点的精解中文介绍文案(侧重于描述记忆或氛围). 高品质画面输出,细节丰富惊人.城市3D风格时代变迁

https://x.com/servasyy/status/1995412825003708860?s=20

Prompt:A stunning hyper-realistic 3D render of architectural evolution displayed as a detailed miniature model diorama on a large circular floating platform, like a round disc divided into four distinct quadrants. The entire composition acts as a physical timeline. ALL structures and landscape elements are rendered as tangible, tactile 3D miniature models with extreme physical depth, dimension, and craftsmanship textures, not flat backgrounds. The circular platform has thick layered edges resembling geological strata in shades of earthen browns, ancient stone, and deep water blues.

First quadrant (top-left, The Ancient Origins): 3D miniature models representing the earliest vernacular architecture and traditional dwellings historically defining this location. Buildings feature historically accurate materials (e.g., ancient timber, stone, mudbrick, traditional roofing), tiny period-appropriate transportation methods (horses, carriages, ancient boats, or foot travel), and miniature human figures in traditional historical attire. The era represented is pre-modernization.

Second quadrant (top-right, The Golden Age/Historic Landmarks): 3D miniature models representing the most iconic, widely recognized historical landmarks and classic architecture from the location's most significant historical era (e.g., colonial, renaissance, industrial revolution, or dynasty peaks). These landmark structures are rendered with incredible detail. Tiny vehicles from a mid-20th-century or equivalent historical period, and miniature figures in era-specific clothing.

Third quadrant (bottom-right, The Modern Metropolis): 3D miniature models of the current, contemporary skyline and urban density. Features the tallest, most recognizable modern skyscrapers and engineering marvels that define the location today. Glass facades, steel structures, tiny contemporary cars, buses, and modern public transport systems, miniature contemporary urban figures.

Fourth quadrant (bottom-left, The Future Vision): 3D miniature models of speculative future architecture suited to this specific location's environment. Includes sustainable design, vertical gardens, organic parametric structures, advanced renewable energy integration, futuristic elevated transport networks, tiny autonomous flying vehicles and drones, miniature futuristic figures in advanced attire.

Environment & Atmosphere: The circular platform floats above a realistic 3D rendered body of water characteristic of the location (a major river, harbor, bay, or lake) with reflections. Traditional local watercraft transform across the quadrants into sleek modern and then futuristic vessels. The background features atmospheric, misty silhouettes of the location's distinct geography transitioning from warm dawn light, through twilight, to a deep night sky with stars.

Lighting Transition: Lighting transitions dynamically across the quadrants: Warm sepia and candlelight tone for the Ancient section; Golden hour sunlight for the Historic section; Vibrant electric city neon for the Modern section; Holographic cyan-magenta glow for the Future section.

Typography: At top center: Elegant bilingual typography displaying the location's name in both English and its native script (combining traditional calligraphy with modern sans-serif fonts), followed by the subtitle "Architectural Journey Through Time".

Technical Specs: Ultra-realistic 3D rendering style, professional architectural miniature photography, strong tilt-shift lens effect creating a shallow depth of field and a convincing miniature appearance. All elements have physical 3D depth. 4K resolution, museum-quality diorama presentation, dramatic studio lighting with depth and atmosphere.

GENERATE THE ARCHITECTURAL EVOLUTION DIORAMA FOR THE SPECIFIC LOCATION AND STYLE OF:类洗涤文明与洗衣机进化3D渲染图

一幅令人惊叹的超写实3D渲染图,呈现人类洗涤文明与洗衣机进化的演变过程. 构图结构: 这不是分割的圆盘,而是一条蜿蜒流动的S形微缩景观长卷,漂浮在虚空之中. 它像一条巨大的洁净水流与丝绸织物交织的纽带,从画面的左下角(原始)蜿蜒延伸至右上角(未来),展现出无缝衔接的技术流动感. 底座材质由粗糙的河岸青石逐渐过渡到光滑的陶瓷,最后变成悬浮的液态金属.

场景流转(从左至右):

起源端(左下方 - 河畔捣衣): 景观始于自然的溪流河畔. 精细的3D微缩模型展示了古代妇女在青石板上使用木制捣衣杵(棒槌)敲打衣物的场景. 旁边散落着木桶和皂角. 水流清澈,带着泡沫. 色调是自然的阳光色与古朴的木纹色,充满生活气息.

机械段(中段 - 蒸汽与齿轮): 随着时代推进,场景进入工业革命时期. 核心焦点是复古的手摇式木桶洗衣机和早期的电动搅拌式洗衣机(带有把衣服压干的滚轴). 背景是铺着黑白格瓷砖的老式自助洗衣房,黄铜管道喷着蒸汽. 微缩的技工正在调试巨大的齿轮装置. 这一段笼罩在温暖的钨丝灯光与蒸汽白雾之中,带有怀旧的机械美感.

现代段(中后段 - 滚筒与智能): 景观全面现代化,充满科技感. 视觉中心是几台解剖视角的现代直驱滚筒洗衣机和洗烘套装,内筒不仅在旋转,还能看到彩色的水流和气泡在其中通过复杂的路径循环. 周围是极简主义的现代阳台场景,微缩的一家三口在通过手机APP远程操控. 灯光转变为明亮的LED白光与触摸屏的科技蓝,体现洁净与效率.

未来端(右上方 - 分子净化): 长卷的顶端指向未来,洗衣机的形态发生了质变,不再有“桶”的概念. 未来的洗涤设备像透明的胶囊或悬浮的光环,衣物悬浮在其中,通过超声波、紫外线或空气负离子进行无水洗涤. 微缩的衣物纤维在微观视角下瞬间恢复平整. 背景是纯净的全息蓝绿色与半透明材质,象征零污染.

环境与元素: 一条半透明的蓝色水流和漂浮的白色泡沫贯穿整个长卷的边缘,将不同时代的洗涤方式串联起来. 背景是不同材质的衣物纹理剪影(从粗麻到丝绸再到纳米面料),随着时代推移,纹理从粗糙变得极其细腻光滑.

技术规格: 超写实3D渲染风格,强烈的移轴镜头效果(Tilt-shift)营造出微缩世界的精致感. 全景深,4K分辨率. 光线处理非常关键,需要做成线性渐变光:从左侧的自然暖阳,平滑过渡到中间的复古暖黄,最后融入右侧冷冽的未来科技蓝光.

文字排版: 图片底部中央悬浮着立体文字:中文“净界”(现代黑体)与英文“EVOLUTION”,辅以小字“从捣衣杵到分子洗涤”。尼克教动作动词

# 描述《疯狂动物城》角色尼克·王尔德动作的教育海报title = "ZOOTOPIA ACTION VERBS"description = "一张教育海报,使用《疯狂动物城》角色尼克·王尔德(Nick Wilde)的形象来展示12个常见的英语动作动词,这张教育海报的阅读对象是不满6岁的非英语国家儿童,因此每个单词对应的尼克·王尔德形象都需要生动、传神,可以添加其他元素来辅助。"theme = "英语动词学习"character = "尼克·王尔德 (Nick Wilde)"# 图像布局信息[layout]rows = 3columns = 4total_panels = 12唐代宫廷乐队

{ "subject": { "description": "A Tang dynasty Chinese court orchestra performing music on a branch of an Agan tree, with musicians playing pipa, erhu, flute, ruan, and horse-hoof lute, musicians and birds casually scattered, some standing, some sitting.", "mirror_rules": null, "age": null, "expression": { "eyes": { "look": null, "energy": null, "direction": null }, "mouth": { "position": null, "energy": null }, "overall": null }, "face": { "preserve_original": false, "makeup": null }, "hair": { "color": null, "style": null, "effect": null }, "body": { "frame": null, "waist": null, "chest": null, "legs": null, "skin": { "visible_areas": null, "tone": null, "texture": null, "lighting_effect": null } }, "pose": { "position": "mixed (some standing, some sitting)", "base": "on a branch of an Agan tree", "overall": "performing music, playing pipa, erhu, flute, ruan, and horse-hoof lute" }, "clothing": { "top": { "type": "Tang dynasty court clothing", "color": null, "details": null, "effect": null }, "bottom": { "type": null, "color": null, "details": null } } }, "accessories": { "jewelry": null, "headwear": null, "device": null, "prop": "pipa, erhu, flute, ruan, horse-hoof lute" }, "photography": { "camera_style": null, "angle": null, "shot_type": null, "aspect_ratio": null, "texture": null, "lighting": "even soft gentle lighting", "depth_of_field": null }, "background": { "setting": "camel-brown stage canvas", "wall_color": "camel brown stage canvas, color code #E7B5C3D", "elements": [ "Agan tree branch", "birds" ], "atmosphere": null, "lighting": "even soft gentle lighting" }, "the_vibe": { "energy": null, "mood": null, "aesthetic": "Song dynasty aesthetics, minimalist, realistic", "authenticity": null, "intimacy": null, "story": "A Tang dynasty Chinese court orchestra performing music on a branch of an Agan tree, musicians and birds casually scattered, some standing, some sitting.", "caption_energy": "Tang court orchestra on a tree branch" }, "constraints": { "must_keep": [ "Tang dynasty court orchestra", "musicians playing pipa, erhu, flute, ruan, and horse-hoof lute", "Agan tree branch", "musicians and birds casually scattered, some standing, some sitting", "camel-brown stage canvas with color code #E7B5C3D" ], "avoid": [] }, "negative_prompt": [ "nsfw", "low quality", "text", "watermark" ]}State-Of-The-Art Prompting For AI Agents

Full talk: State-Of-The-Art Prompting For AI Agents

Here’s a summary of key prompt engineering techniques used by some of the best AI startups:

- Be Hyper-Specific & Detailed (The “Manager” Approach):

- Summary: Treat your LLM like a new employee. Provide very long, detailed prompts that clearly define their role, the task, the desired output, and any constraints.- Example: Paraphelp's customer support agent prompt is 6+ pages, meticulously outlining instructions for managing tool calls.2. Assign a Clear Role (Persona Prompting):

- Summary: Start by telling the LLM who it is (e.g., "You are a manager of a customer service agent," "You are an expert prompt engineer"). This sets the context, tone, and expected expertise.- Benefit: Helps the LLM adopt the desired style and reasoning for the task.3. Outline the Task & Provide a Plan:

- Summary: Clearly state the LLM's primary task (e.g., "Your task is to approve or reject a tool call..."). Break down complex tasks into a step-by-step plan for the LLM to follow.- Benefit: Improves reliability and makes complex operations more manageable for the LLM.4. Structure Your Prompt (and Expected Output):

- Summary: Use formatting like Markdown (headers, bullet points) or even XML-like tags to structure your instructions and define the expected output format.- Example: Paraphelp uses XML-like tags like `<manager_verify>accept</manager_verify>` for structured responses.- Benefit: Makes it easier for the LLM to parse instructions and generate consistent, machine-readable output.5. Meta-Prompting (LLM, Improve Thyself!):

- Summary: Use an LLM to help you write or refine your prompts. Give it your current prompt, examples of good/bad outputs, and ask it to "make this prompt better" or critique it.- Benefit: LLMs know "themselves" well and can often suggest effective improvements you might not think of.- Query successful6. Provide Examples (Few-Shot & In-Context Learning):

- Summary: For complex tasks or when the LLM needs to follow a specific style or format, include a few high-quality examples of input-output pairs directly in the prompt.- Example: Jazzberry (AI bug finder) feeds hard examples to guide the LLM.- Benefit: Significantly improves the LLM's ability to understand and replicate desired behavior.7. Prompt Folding & Dynamic Generation: